(Speed of electrons)^2 vs energy. Special Relativity

by A.P. French, MIT Introductory Physics Series, 1968

You can't get particles to go faster than 3x10^8 m/sec regardless of how much energy you give them. The speed of light (in a vacuum) is unaffected by the speed of the source of the light. Everybody who measures the speed of light gets the same value, c = 3 x 10^8 m/sec. Special relativity provides a fundamental understanding of these otherwise totally bizarre experimental results.

Nothing can go faster than the speed of light?

(update 11/18/11 -- see Appendix 'Neutrinos faster than c?')

How do we know? Well, for one thing, it's an experimental fact. Electrons are very light charged particles, so they are easily accelerated. It takes less than a million volts and a few feet to accelerate electrons to near the speed of light. Acceleration tests on electrons were done over 50 years ago, up to 15 MeV, with a Van de Graaff generator and a room-size linear accelerator at MIT. The speed of the electrons was determined by measuring how long it took them to move (in a vacuum) between two points 20 feet apart. At the speed of light the distance moved in one nanosecond (10^-9 sec) is about one foot (0.3 meter), so moving 20 feet takes about 20 nsec, and even 50 years ago time could be measured to 1 nsec resolution using an oscilloscope. The energy carried by the electrons was measured by having them hit a barrier at the end of the machine and measuring the temperature rise.
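Here is a quick numerical check of the timing argument above, as a minimal Python sketch. It uses the text's rounded c = 3 x 10^8 m/sec and the 20-foot path. The 2.83 x 10^8 m/sec electron speed is the measured 1 MeV value quoted below, and `flight_time_ns` is just an illustrative helper name.

```python
# Time of flight over the 20-foot timing path described above.
C = 3.0e8        # speed of light in a vacuum, m/sec (rounded as in the text)
FOOT = 0.3048    # meters per foot

def flight_time_ns(distance_ft, speed_m_per_sec):
    """Travel time in nanoseconds for a path given in feet."""
    return distance_ft * FOOT / speed_m_per_sec * 1e9

feet_per_ns = C * 1e-9 / FOOT        # distance light covers in 1 nsec, in feet
t_light = flight_time_ns(20, C)      # 20 ft at the speed of light
t_1mev = flight_time_ns(20, 2.83e8)  # 20 ft at the measured 1 MeV electron speed

print(f"light: {feet_per_ns:.2f} ft per nsec")
print(f"20 ft at c: {t_light:.1f} nsec; at 2.83e8 m/sec: {t_1mev:.1f} nsec")
```

Light needs about 20.3 nsec for the 20 feet, and an electron at 2.83 x 10^8 m/sec needs about 21.5 nsec. The difference is only about 1.2 nsec, which is why nanosecond timing resolution mattered.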

Let's calculate the speed of 1 MeV electrons from classical (Newtonian) mechanics, which has no 'speed of light' limitation. All we need do is set the kinetic energy to 1 MeV and solve for velocity. Because we want to use standard MKS units (length in meters, mass in kilograms, and time in seconds), we first need to convert eV to joules.

Charge of an electron: $e = 1.6 \times 10^{-19}$ coulomb

Mass of an electron: $m = 9.1 \times 10^{-31}$ kg

$$1\ \text{MeV} = e \times 10^{6}\ \text{volt} = 1.6\times10^{-19}\ \text{coulomb} \times 10^{6}\ \text{volt} = 1.6\times10^{-13}\ \text{joule}$$

Kinetic energy E (joules), mass m (kg), velocity v (meter/sec):

$$E = \tfrac{1}{2} m v^2$$

Solving for velocity:

$$v = \sqrt{\frac{2E}{m}} = \sqrt{\frac{2 \times 1.6\times10^{-13}\ \text{joule}}{9.1\times10^{-31}\ \text{kg}}} = \sqrt{0.35\times10^{18}\ (\text{m/sec})^2} = 0.59\times10^{9}\ \text{m/sec} = 5.9\times10^{8}\ \text{m/sec}$$
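The same Newtonian arithmetic in Python, as a sketch using the text's rounded constants. `classical_speed` is a hypothetical helper name, and like the hand calculation it deliberately has no speed-of-light limit.

```python
import math

E_CHARGE = 1.6e-19   # electron charge, coulomb (rounded as in the text)
E_MASS = 9.1e-31     # electron mass, kg (rounded as in the text)

def classical_speed(kinetic_energy_ev):
    """Newtonian speed in m/sec from E = 1/2 m v^2 (no speed-of-light limit)."""
    energy_joule = kinetic_energy_ev * E_CHARGE   # 1 eV = 1.6e-19 joule
    return math.sqrt(2 * energy_joule / E_MASS)

v_1mev = classical_speed(1e6)     # the 1 MeV example
v_100ev = classical_speed(100)    # the 100 eV radio-tube case

print(f"1 MeV:  v = {v_1mev:.2e} m/sec, v^2 = {v_1mev**2:.2e} (m/sec)^2")
print(f"100 eV: v = {v_100ev:.2e} m/sec")
```

Speed goes as the square root of energy, so cutting the energy by 10^4 cuts the speed by 100. That is why the 100 eV result is 1/100 of the 1 MeV result, the shortcut used in the radio-tube riff below. Squaring the 1 MeV speed gives about 35 x 10^16 (m/sec)^2, the value compared with the plot.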

Our calculated speed of 5.9 x 10^8 meter/sec is about twice the speed of light (3 x 10^8 meter/sec) in a vacuum. Is that what is measured? No! At 1 MeV the measured electron speed is about 2.83 x 10^8 m/sec, which is 94% of the speed of light. In fact the measured speed came out less than 3 x 10^8 m/sec at 15 MeV, and it always comes out less than 3 x 10^8 m/sec regardless of how much energy you give the electrons. This is a very remarkable result!

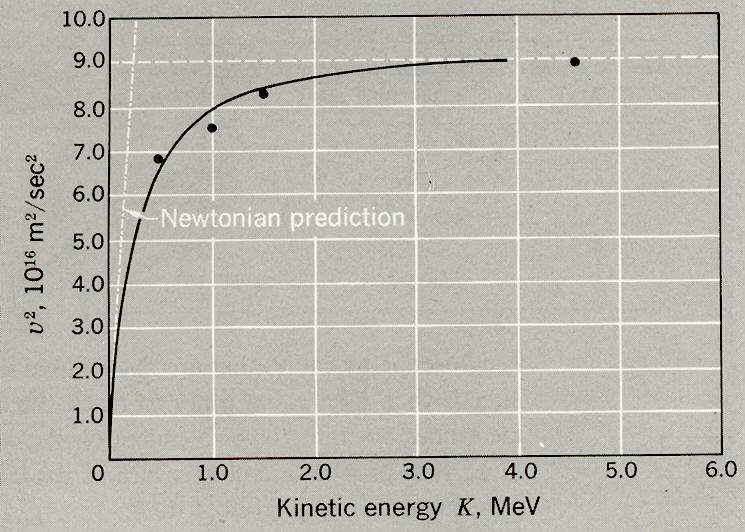

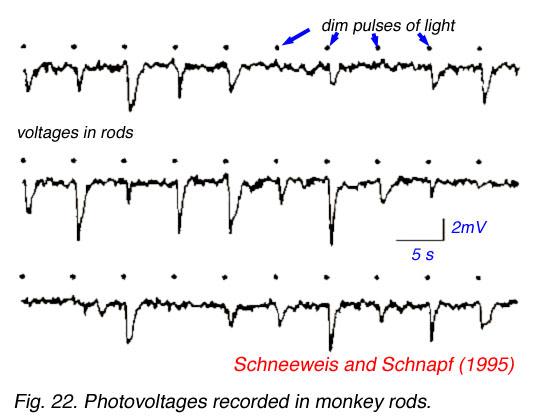

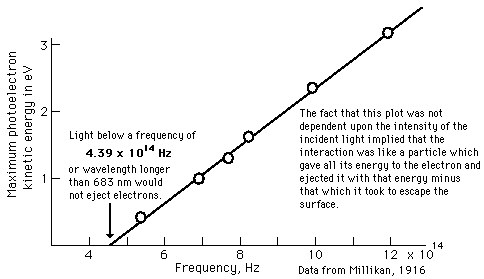

Since kinetic energy in classical mechanics is proportional to velocity squared, plotting velocity vs sq root {energy}, or velocity squared vs energy, gives a straight line. Here is the measured electron speed vs energy taken 50 years ago (plotted as speed squared vs energy). The diagonal white line to the left is the classical speed prediction. Our calculated 5.9 x 10^8 m/sec for 1 MeV electrons, squared, is 35 x 10^16 (m/sec)^2, so it is above the top of this graph.


The plot clearly shows that as energy increases, the increases in speed get smaller and smaller, with the speed gradually (asymptotically) approaching a hard speed limit. The plot's asymptote is labeled 9 x 10^16 (m/sec)^2. That is the square of 3 x 10^8 m/sec, the speed usually represented as c, the speed of light in a vacuum.
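The essay doesn't write down the formula behind the measured curve, so what follows rests on an assumption: the standard special-relativity relation KE = (gamma - 1) m c^2, with gamma = 1/sq rt (1 - v^2/c^2). Solving it for v gives a curve that flattens toward the 9 x 10^16 asymptote instead of rising as a straight line. Here is a minimal Python sketch using the same rounded constants as above. `relativistic_speed` is an illustrative name.

```python
import math

C = 3.0e8            # speed of light, m/sec (rounded as in the text)
E_CHARGE = 1.6e-19   # coulomb
E_MASS = 9.1e-31     # kg
REST_ENERGY_EV = E_MASS * C**2 / E_CHARGE   # m c^2 in eV (about 0.51 MeV)

def relativistic_speed(kinetic_energy_ev):
    """Speed in m/sec, assuming special relativity: KE = (gamma - 1) m c^2."""
    gamma = 1 + kinetic_energy_ev / REST_ENERGY_EV
    return C * math.sqrt(1 - 1 / gamma**2)

for mev in (0.5, 1, 5, 15):
    v = relativistic_speed(mev * 1e6)
    print(f"{mev} MeV: v^2 = {v**2 / 1e16:.2f} x 10^16 (m/sec)^2, {v / C:.2%} of c")

v_1mev = relativistic_speed(1e6)
v_15mev = relativistic_speed(15e6)
```

At 1 MeV this gives about 94% of c, in line with the measured 2.83 x 10^8 m/sec. At 15 MeV it is above 99.9% of c but still below it. No finite energy makes the square root reach 1.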

It turns out that the hard speed limit we measure for the electron holds for all particles at any energy! What is going on here? Well, Einstein (with help from Maxwell) figured it out a century ago, and he figured it out before the acceleration experiments were run!

Riff on electrons in a radio tube --- In the tubes used in old radios, electrons are 'boiled' (thermally excited) off the cathode and accelerated through the vacuum in the tube to the positively charged plate. The plate is typically about 100 V above the cathode, so during the acceleration the electrons pick up about 100 eV of energy. Guess how fast the electrons are going when they hit the plate?

Well, we can figure the answer in our head --- Kinetic energy goes as vel^2, so vel goes as sq rt (energy). 100 eV is 1 MeV divided by 10^4, so scaling from the 1 MeV example above, the vel in the tube must be (200% speed of light)/sq rt (10^4) = 200% c/100 = 2% speed of light. Yup, in the typical radio tube with a measly 100 V, electrons are accelerated in one inch to 2% of the speed of light, or 6 x 10^6 m/sec (3,700 miles/sec)!

Check

$$100\ \text{eV} \stackrel{?}{=} \tfrac{1}{2} m v^2$$

$$10^{2}\ \text{volt} \times 1.6\times10^{-19}\ \text{coulomb} \stackrel{?}{=} 0.5 \times 9.1\times10^{-31}\ \text{kg} \times \left(6\times10^{6}\ \text{m/sec}\right)^2$$

$$1.6\times10^{-17}\ \text{joule} \stackrel{?}{=} 164\times10^{-19}\ \text{joule}$$

$$1.6\times10^{-17} \approx 1.6\times10^{-17}$$

Proof that speed of a light source does not change the speed of light

Observations of a huge number of binary star systems are consistent with normal elliptical orbits that we can calculate very accurately. This means the light from the approaching and receding stars must be traveling to us at exactly (or almost exactly) the same speed. This is strong experimental evidence that the speed of light is independent of the speed of its source.

Questions about 'speed of light'

A bunch of very interesting questions come immediately to mind when hearing of the speed-of-light speed limit.

* Why 3x10^8 m/sec?
* Is it independent of other physics parameters?
* Can it be calculated?
* Why is the speed of light slower in materials?

Why 3x10^8 m/sec?

3 x 10^8 m/sec is the measured value of the speed of light. There is no (generally accepted) theoretical reason why it should be this value; it just is this value. There are a bunch of constants in physics like this that are measured and cannot be derived, for example the gravitational constant. A lot of physicists are not too happy about this, and for a long time they have been working to better understand why these constants take on the values they do.

Is c independent of other physics parameters?

Answer: no. The speed of light (c) shows up everywhere in fundamental physics equations. The speed of light (c) is known as a universal constant, as are Planck's constant (h) and the gravitational constant (G). In fact these three universal constants can be uniquely combined (usually using the reduced Planck constant, ħ = h/2π) to give fundamental units of length, mass, and time. These are known as Planck units, favorites of particle physicists.

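| Planck unit | Formula | Value |
|---|---|---|
| Planck length | sqrt{ħG/c^3} | 1.6 x 10^-35 m |
| Planck mass | sqrt{ħc/G} | 2.2 x 10^-8 kg |
| Planck time | (1/c) x Planck length = sqrt{ħG/c^5} | 5.4 x 10^-44 sec |

(ħ = h/2π is the reduced Planck constant; the listed values correspond to ħ rather than h.)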
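The table values can be reproduced with a few lines of Python. This sketch assumes the CODATA 2018 values for ħ, G, and c, which the text does not give. It uses ħ rather than h, since that is what the listed values correspond to.

```python
import math

# CODATA 2018 values (assumed; the text only lists the results)
HBAR = 1.054571817e-34   # reduced Planck constant h/(2 pi), joule-sec
G = 6.67430e-11          # gravitational constant, m^3 / (kg sec^2)
C = 2.99792458e8         # speed of light in a vacuum, m/sec

planck_length = math.sqrt(HBAR * G / C**3)   # meters
planck_mass = math.sqrt(HBAR * C / G)        # kg
planck_time = planck_length / C              # same as sqrt(HBAR G / C^5), sec

print(f"length {planck_length:.3e} m, mass {planck_mass:.3e} kg, "
      f"time {planck_time:.3e} sec")
```

With these inputs the results are about 1.62 x 10^-35 m, 2.18 x 10^-8 kg, and 5.39 x 10^-44 sec.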

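Consider also (with e0 and u0 written as ε0 and μ0):

$$c = \frac{1}{\sqrt{\varepsilon_0 \mu_0}} \tag{1}$$

$$Z_0 = \mu_0 c = \sqrt{\frac{\mu_0}{\varepsilon_0}} \tag{2}$$

$$E = m c^2 \tag{3}$$

$$\alpha = \frac{e^2}{2 h \varepsilon_0 c} \tag{4}$$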

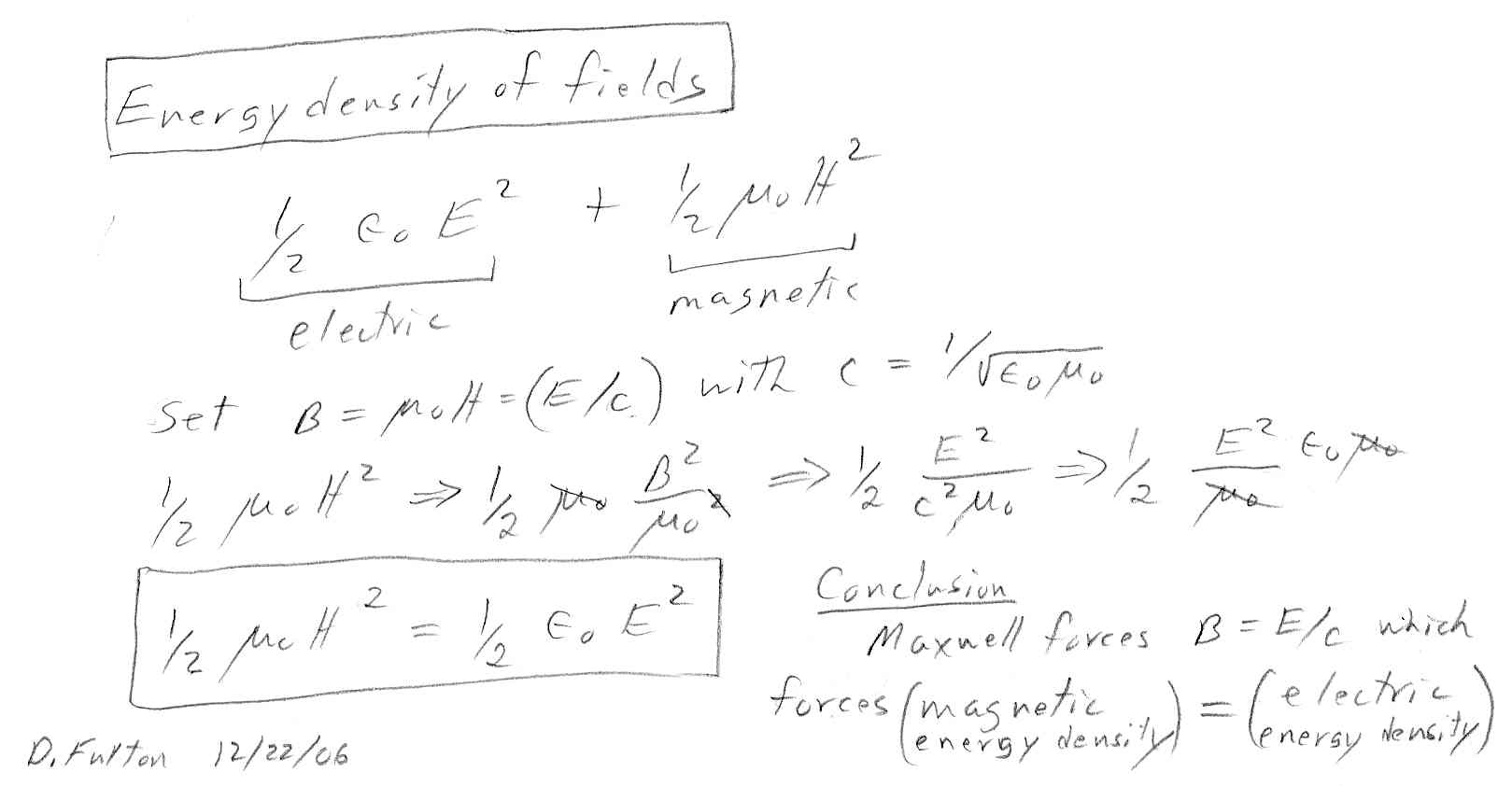

Equation (1) says that the speed of light can be calculated from two constants of the vacuum: one (e0) is an electric-capacitive scaling constant and the second (u0) is a magnetic-inductive scaling constant. The speed of light is the inverse of their geometric mean.

e0 (electric permittivity) is a constant that scales the electric (E) field squared (in a vacuum) to give the energy density stored in the electric field.

Energy density in electric (E) field = 1/2 x e0 x E^2

u0 (magnetic permeability) is a constant that scales the magnetic (H) field squared (in a vacuum) to give the energy density stored in the magnetic field.

Energy density in magnetic (H) field = 1/2 x u0 x H^2

Equation (2) gives a universal constant known as the characteristic impedance of free space (Z0). It is the square root of the ratio of u0 to e0 and has units of ohms. The value is about 377 ohms and is known very accurately (12 digits). Ohms in circuit theory are volts/amps, E has units of volts/meter, and H has units of amps/meter, so Z0 can also be expressed as the ratio of the electric to magnetic field (E/H) of a wave in a vacuum.

Equation (3) is the famous Einstein equation that says energy and mass are (in some way) equivalent. c^2 is the (fixed) proportionality constant that scales mass to energy and energy to mass.

Equation (4) is the formula for the fine structure constant, usually designated alpha. Alpha is a constant of the standard model of particle physics (one of 19) that is unusual because it is dimensionless. In quantum electrodynamics the fine structure constant is a coupling constant that indicates the strength of the interaction between electrons and photons. It is also involved in various estimates of the size of the electron. Alpha is equal to the charge of the electron squared divided by the product of 2h (twice Planck's constant), e0 (electric permittivity of the vacuum), and c (speed of light in a vacuum). Its value has been very accurately measured.
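Equations (1) through (4) can be evaluated numerically. This Python sketch assumes CODATA 2018 values for e0, u0, the electron charge and mass, and Planck's constant, since the text gives only rounded numbers. It computes c, Z0, the electron's rest energy from E = m c^2, and alpha.

```python
import math

# CODATA 2018 values (assumed; the text quotes only rounded numbers)
EPS0 = 8.8541878128e-12      # e0, electric permittivity of vacuum, farad/m
MU0 = 1.25663706212e-6       # u0, magnetic permeability of vacuum, henry/m
E_CHARGE = 1.602176634e-19   # electron charge, coulomb
H = 6.62607015e-34           # Planck's constant, joule-sec
E_MASS = 9.1093837015e-31    # electron mass, kg

c = 1 / math.sqrt(EPS0 * MU0)                       # equation (1), m/sec
z0 = math.sqrt(MU0 / EPS0)                          # equation (2), ohms (= MU0 * c)
rest_energy_mev = E_MASS * c**2 / E_CHARGE / 1e6    # equation (3) for an electron
alpha = E_CHARGE**2 / (2 * H * EPS0 * c)            # equation (4), dimensionless

print(f"c = {c:.6e} m/sec, Z0 = {z0:.2f} ohms")
print(f"electron m c^2 = {rest_energy_mev:.4f} MeV, 1/alpha = {1 / alpha:.3f}")
```

It should give c close to 2.998 x 10^8 m/sec, Z0 close to 377 ohms, an electron rest energy of about 0.511 MeV, and 1/alpha close to 137.036.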

Can c be calculated?

Answer: historically, yes. Maxwell, around the time of the US Civil War, had a eureka moment. In his equations summarizing the laws of electricity and magnetism (laws based on a huge number of experiments by Faraday and others), he found that a self-sustaining electromagnetic wave was possible. He found he was able to calculate its speed, and it turned out to be just a combination of two constants that can be measured in lab experiments with electricity and magnetism. When he did the calculation, the speed came out to be 3 x 10^8 m/sec, which he knew (even in the 1860's!) was the speed of light. Yikes (holy shit!), he suddenly realized that light, which had been a mystery for centuries, was very likely an electromagnetic wave (as Faraday had long suspected).

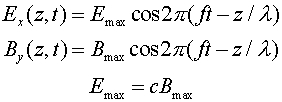

His equation for the speed of light turned out to be very simple {c = 1/sq rt (e0 x u0)}: the speed of light is equal to the inverse of the geometric mean of two constants (e0, u0). One constant (e0) comes from the formula for capacitance (C), where energy is stored in the electric field. The other constant (u0) comes from the formula for inductance (L), where energy is stored in the magnetic field. Each constant multiplied by half its field squared (1/2 E^2 or 1/2 H^2) yields the energy stored in the field per unit volume.

A simple (idealized) capacitor is just two plates of area A, separated by distance d. When a voltage is applied between the plates, a (uniform) electric field (E) exists between the plates, and energy is stored in the electric field. A simple inductor is a (circular) toroid of length d, made with N turns of wire, each turn having cross-sectional area A. When a current is flowing through the toroid, a (uniform) magnetic field (H) exists inside the toroid, and energy is stored in the magnetic field.

| | Capacitor (parallel plates) | Inductor (toroid) |
|---|---|---|
| Formula | C = e0 x A/d | L = u0 x N^2 x A/d, or L = u0 x (N/d)^2 x A x d |
| A | area of plates | area of each coil in toroid |
| d | separation of plates | length (average circumference) of toroid |
| N | n/a | number of coils in toroid |
| Energy stored in the field | 1/2 e0 x E^2 x A x d | 1/2 u0 x H^2 x A x d |

Do you see a symmetry here?
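One way to see the symmetry is to check numerically that the field-energy expressions agree with the usual circuit energies: 1/2 C V^2 for a capacitor and 1/2 L I^2 for an inductor. Those are standard circuit formulas, not stated in the text. The dimensions, voltage, and current below are made-up example values; only the formulas come from the table. A Python sketch:

```python
import math

EPS0 = 8.854e-12           # e0, farad/m
MU0 = 4 * math.pi * 1e-7   # u0, henry/m (the classic value)

# Hypothetical parallel-plate capacitor: plate area A, gap d, voltage V
area_c, gap, volts = 0.01, 0.001, 100.0     # m^2, m, volts
cap = EPS0 * area_c / gap                   # C = e0 x A/d
e_field = volts / gap                       # uniform E between plates, volt/m
energy_circuit_c = 0.5 * cap * volts**2                     # 1/2 C V^2
energy_field_c = 0.5 * EPS0 * e_field**2 * area_c * gap     # 1/2 e0 E^2 A d

# Hypothetical toroid: N turns of area A, mean circumference d, current I
turns, area_l, length, amps = 100, 1e-4, 0.2, 1.0   # count, m^2, m, amps
ind = MU0 * turns**2 * area_l / length      # L = u0 x N^2 x A/d
h_field = turns * amps / length             # uniform H inside toroid, amp/m
energy_circuit_l = 0.5 * ind * amps**2                      # 1/2 L I^2
energy_field_l = 0.5 * MU0 * h_field**2 * area_l * length   # 1/2 u0 H^2 A d

print(f"capacitor: {energy_circuit_c:.4e} J vs {energy_field_c:.4e} J")
print(f"inductor:  {energy_circuit_l:.4e} J vs {energy_field_l:.4e} J")
```

Both pairs agree because E = V/d between the plates and H = N I/d inside the toroid. Each field energy density times the volume A x d therefore reproduces the circuit formula.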

Why is the speed of light slower in materials?

One explanation I have seen is that light slows down in materials because light photons are constantly being absorbed and reemitted. 'Explanations' like this are useless, because photons in the presence of materials are being absorbed and emitted all the time.

Speculation on what sets speed of light in vacuum

There are suggestions and hints that the speed of light (and e0) may be determined by the properties of the vacuum. In particular, the electric field of light is slowed down (probably from infinity), because as it travels it has to continually do (reversible) work polarizing charged virtual particles of the vacuum (electrons and positrons).

Polarization of vacuum virtual particles could be analogous to the polarization of a dielectric material in a parallel plate capacitor or a cable. Bound electrons in atoms and molecules of the dielectric material are somewhat like little springs. An applied E field does (reversible) work on these bound electrons, slightly displacing them relative to the positive nuclei, causing the applied E field to be partially canceled. This mechanism increases the stored electric energy, and since it takes time to do work (add energy), dielectrics in cables slow down signal propagation.

Maxwell's equations for a traveling wave require that the energy in the E and H fields remain balanced. When dielectrics increase e, the E amplitude automatically drops such that the electric field (photon) energy does not change, keeping the balance between electric and magnetic energy. Therefore u0 is secondary; it's principally e0 (or e) that sets the speed of light in a vacuum or in a material.

The suggestions and hints (see below) are all I can find. I can find no value for the bare or naked electron charge. I can find no analysis that says the speed of light is, or is not, affected by virtual particles of the vacuum. What this probably means is the effect of the vacuum on the speed of light and the electron charge is not calculable. Physicists (pretty much) only write up what they can calculate.

Consider:

-- Particle physicists argue that inside a normal electron is a (so-called) 'naked' or 'bare' electron with a higher charge. The argument is that virtual particles of the vacuum form a cloud around the electron that is polarized: in their brief existence, positively charged particles are pulled slightly closer and negatively charged particles are pushed slightly away. Particle physicist Dan Hooper in his 2006 book Dark Cosmos says this:

During their brief lives the positively charged particles in this quantum sea will be pulled slightly toward the electron, forming a sort of cloud around it. The cloud of positive particles conceals some of the strength of the electron's field, effectively weakening it. (Dark Cosmos, 2006, p. 93-94)

-- Recent high energy probes of the electron have tended to confirm the virtual particle shielding of the electron E field. The fine structure constant, which is proportional to e^2 (see equation (4)), was found to be about 7% higher when part of the virtual shielding cloud was penetrated. This corresponds to an increase in the effective charge (q) of about 3.5%. Here is an NIST reference:

The virtual positrons are attracted to the original or "bare" electron while the virtual electrons are repelled from it. The bare electron is therefore screened due to this polarization. Since alpha (fine structure constant) is proportional to e^2, it is viewed as the square of an effective charge "screened by vacuum polarization and seen from an infinite distance." (NIST, National Institute of Standards and Technology web site)

At shorter distances corresponding to higher energy probes, the (virtual) screen is partially penetrated and the strength of the electromagnetic interaction increases since the effective charge increases. Indeed, due to e+e- and other vacuum polarization processes, at an energy corresponding to the mass of the W boson (approximately 81 GeV, equivalent to a distance of approximately 2 x 10^-18 m), alpha is approximately 1/128 compared with its zero-energy value of approximately 1/137. Thus the famous number 1/137 is not unique or especially fundamental. (NIST, National Institute of Standards and Technology web site)

-- About 15 years ago several prominent scientists writing in reputable journals, including the world's most famous journal (Nature), speculated that virtual particles of the vacuum affect the speed of light and suggested this is "worthy of serious investigation by theoretical physicists".

The vacuum is not an empty nothing but contains randomly fluctuating electromagnetic fields and virtual electron-positron pairs with an infinite zero-point energy. Light propagating through space interacts with the vacuum fields, and observable properties, including the speed of light, are in part determined by this interaction. ... The suggestion that the value of the speed of light is determined by the structure of the vacuum is worthy of serious investigation by theoretical physicists. (S. Barnett, Nature 344 (1990), p. 289)

Scharnhorst and Barton suggest that a modification of the vacuum can produce a change in its permittivity [and permeability] with a resulting change in the speed of light. The role of virtual particles in determining the permittivity of the vacuum is analogous to that of atoms or molecules in determining the relative permittivity of a dielectric material. The light propagating in the material can be absorbed, but the atoms remain in their excited states for only a very short time before re-emitting the light. This absorption and re-emission is responsible for the refractive index of the material and results in the well-known reduction of the speed of light. (S. Barnett, Nature 344 (1990), p. 289; also, later, Barton and K. Scharnhorst, Journal of Physics A: Mathematical and General 26 (1993), p. 2037-2046)

Modifications of the vacuum that populate it with real or virtual particles reduce the speed of light photons. (J. I. Latorre, P. Pascual and R. Tarrach, Nuclear Physics B 437 (1995), p. 60-82)

As a photon travels through a vacuum, it is thought to interact with these virtual particles, and may be absorbed by them to give rise to a real electron-positron pair. This pair is unstable, and quickly annihilates to produce a photon like the one which was previously absorbed. It was recognized that the time that the photon's energy spends as an electron-positron pair would seem to effectively lower the observed speed of light in a vacuum, as the photon would have temporarily transformed into subluminal particles. (Wikipedia on Scharnhorst)

Fundamental to both approaches is the theoretical picture of the vacuum as a turbulent sea of randomly fluctuating electromagnetic fields and short-lived pairs of electrons and positrons (the antimatter counterparts of electrons) that appear and disappear in a flash. According to quantum electro-dynamics, light propagating through space interacts with these vacuum fields and electron-positron pairs, which influence how rapidly light travels through the vacuum. (comment on a 1990 Barton and K. Scharnhorst paper, Science News, 1990)

Light in normal empty space is slowed by interactions with the unseen waves or particles with which the quantum vacuum seethes. (Encyclopedia of Astrobiology, Astronomy, & Spaceflight -- David Darling)

Mystery of photons

John Wheeler, the well-known physicist who coined the term 'black hole', said of photons (in reference to single photons that apparently interfere with themselves in double-slit experiments) --- A photon is a 'smoky dragon'. It shows its tail (where it originates) and its mouth (where it is detected), but elsewhere there is smoke: "in between we have no right to speak about what is present."

In the well-regarded 1,200-page 1995 book Optical Coherence and Quantum Optics by Mandel and Wolf, you find stuff like this:

--- "The one-photon state must be regarded as distributed over all space-time." (p 480) Well, that's sure clear. A photon is everywhere, both in space and in time. Yikes! In a generalized way this book derives the equations governing how the free E and H fields change in time, and (surprise) they turn out to be exactly Maxwell's field equations.

--- "We may regard the corresponding photon as being approximately localized in the form of a wave packet centered at (a known) position at a given time." (p 480) "However, in attempting to localize photons in space we encounter some fundamental difficulties." (p 629). So a photon is a wave packet, but it's difficult to locate exactly.

--- "The field (of a photon) is defined in terms of its effect on a test charge, which implies an effect averaged over a finite region of space and time." (p 505). This is the heart of the matter -- What we really know about a photon is how it interacts with an electron (test charge).

--- "The counts registered by a (photo) detector whose surface is normal to the incident field and exposed for some finite time (delta t) are interpreted most naturally as a measurement of the number of photons in a cylindrical volume whose base is the sensitive area of the detector and whose height is c x (delta t)" (p 629). In other words, a natural interpretation of photons coming into a detector is that they are like raindrops falling to the ground. (This is an analogy that Feynman makes in his introductory video lecture on photons.)

The challenge, which many people have taken up, is to find a picture or model of a photon in space that fits with Maxwell's equations and the experiments underlying quantum physics. Online you can find lots of speculative self-published technical writing about photons.

My approach to getting a handle on light photons is to analyze and picture how E and H fields propagate down cables. In real cables signals move a little slower than the speed of light because of the properties of the materials of the cable; mostly the slowdown is due to the extra capacitance introduced by the insulating material between the conductors. In an ideal, lossless cable (technically a transmission line or waveguide) the space between the conductors is air (or a vacuum). This allows the signal to propagate at 3 x 10^8 m/sec, the speed of light in a vacuum. Also the conductors are considered ideal, with zero resistance.

Are all traveling electromagnetic fields (including those in cables) quantized? How close are cable E and H fields to photons? Who knows. But there do appear to be a lot of similarities between the cable E & H fields and known properties of light:

* fields travel at the same speed (3 x 10^8 m/sec)
* fields are transverse to the direction of motion
* fields are in spatial quadrature (i.e. fields are at right angles to each other)
* fields are in time phase (i.e. E and H transition at a point in space at the same time)

But there are differences too. The cable E field picture does not seem to fit with the known polarization of light. Polarization is pictured as the direction in which the E field arrows of a light photon point. Materials known as polarizers will pass or rotate light with E fields in only one direction (say up). But in a cylindrical cable the propagating E field looks like a ring of arrows pointing out (or in), like a mixture of all polarizations.

One key difficulty with photons is how to picture the E fields. E fields terminate (end) on charges or a conductor. In a cable the E field is constrained by the geometry of the two conductors. What does the E field look like in space? People wave their arms that the photon geometry must somehow be spherical (or cylindrical). Or they speculate that the vacuum throws up some sort of virtual charge cloud that terminates the photon E field.

Another difficulty is the meaning of frequency when applied to the photon 'particle'. No one seems to agree on how long a photon is or how many cycles (if any!) it has. Quantum mechanics says the energy of a light photon is proportional to its frequency (E = Planck's constant x frequency). My thinking on this is shaped by the cable analogy.

In the cable there is no natural frequency or natural sinewave. My analysis of the cable uses an electrical engineer's favorite waveform, the 'step', which is just an instantaneous change in voltage, to drive (excite) the cable. Engineers know this waveform is often more revealing of the properties of a system than sinewaves, which are all physicists seem to use. In the ideal lossless cable the shape of the voltage (& current) at any point in the cable is exactly the same (except, of course, delayed in time) as the shape of the voltage (& current) that was applied externally to drive the cable. In other words, the cable signal 'frequencies' are determined totally by the source of the signal.

Therefore by analogy with the cable it seems likely to me that the 'frequency' of a photon is determined entirely by the source of the photons. Radio photons, coming from radio antennas, (it seems to me) must have very slowly changing E and H fields (relative to light). Periodically no energy is sent from the antenna and this is why periodically when light E and H fields are plotted they both simultaneously go to zero. At times no (light) energy is in the fields, because no energy was sent.

Signals in a cable as an analog for light in a vacuum

An electric signal travels down a cable in much the same way light travels through a vacuum. Studying the details of how a signal propagates in a cable can provide a lot of insight about how light moves both in a vacuum and in materials. A cable is something you can touch and measure. With a good oscilloscope you can 'see' how the voltages and currents change as a signal goes down the cable. It's possible to make circuit models of the cable that can be analyzed with circuit analysis programs, which can confirm and extend the test data. Also it's relatively easy to work out the capacitive (E) and inductive (H) equations of a cable because the geometry is so simple. Combine these approaches and you can quantitatively and visually see how a signal moves down a cable, and how the E and H fields move. From there it is but a small step to understanding light in materials.

Studying propagation in a long cable has some nice advantages. One, a cable is a simple geometry with easily measured and calculable electrical parameters: inductance (L) and capacitance (C). Two, a cable can be approximated by a repeated simple circuit (series L, parallel C) that allows circuit designers (like me) to understand it and calculate the speed using well known circuit design techniques. Three, application of Maxwell's equations to the simple geometry of cylindrical cables is easy, so the speed can be calculated from the electrical parameters of the cable, and sketches can be made of how the E and H vectors point as the signal propagates. This approach also is helpful in understanding polarization. The cylindrical geometry of a round cable provides understanding of unpolarized signals, and when extended to an idealized flat cable it provides understanding of polarized signals.

Typical cable numbers

As a motor control engineer, I have often measured the propagation of electrical signals down cables from the controller to the motor, and I know something about it. Consider a motor connected through a 100-foot cable to a box of electronics called a motor controller. When the transistors in the motor controller switch the voltage, which can be very fast (20-50 nsec), there is typically a delay of about 220 nsec before the motor 'knows' the voltage has changed. This 220 nsec delay is the time it takes the signal, at 2.2 nsec/ft, to travel down the 100 ft of the cable. (Footnote: it doesn't matter that the cable might be curled up.) 1 nsec/ft is (approximately) the speed of light in a vacuum, so 2.2 nsec/ft is about 45% of the speed of light in a vacuum. Signals in a cable travel slower than light in a vacuum for the same reason that light in glass travels slower.

Measured inductance and capacitance for a foot of motor cable (consistent with 2.2 nsec/ft) are typically

L = 50 nH/ft

C = 100 pF/ft

The delay equation is

$$\text{delay per unit length} = \sqrt{LC} = \sqrt{(50\times10^{-9}\ \text{H/ft}) \times (0.100\times10^{-9}\ \text{F/ft})} = \sqrt{5}\ \text{nsec/ft} \approx 2.2\ \text{nsec/ft}$$
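The delay arithmetic as a Python sketch. It uses the typical per-foot L and C quoted above and the text's rounding of light in a vacuum to 1 nsec/ft.

```python
import math

L_PER_FT = 50e-9        # henry per foot (typical motor cable, from the text)
C_PER_FT = 100e-12      # farad per foot
LIGHT_NS_PER_FT = 1.0   # the text's rounding for light in a vacuum

delay_ns_per_ft = math.sqrt(L_PER_FT * C_PER_FT) * 1e9   # sqrt(LC), nsec/ft
delay_100ft_ns = 100 * delay_ns_per_ft                    # whole 100 ft cable
fraction_of_c = LIGHT_NS_PER_FT / delay_ns_per_ft         # signal speed / c

print(f"{delay_ns_per_ft:.2f} nsec/ft, {delay_100ft_ns:.0f} nsec for 100 ft, "
      f"{fraction_of_c:.0%} of c")
```

This gives about 2.24 nsec/ft and about 224 nsec for 100 ft (the text rounds to 220). The signal speed works out to about 45% of c.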

Circuit model of the cable

Inductors (L) and capacitors (C) are idealized circuit elements that losslessly store and release energy. An inductor stores energy in a magnetic (B) field, and a capacitor stores energy in an electric (D) field. Energy stored in an inductor is proportional to its inductance, and energy stored in a capacitor is proportional to its capacitance. When you connect an L and C together, they affect each other in such a manner that energy sloshes back and forth between them sinusoidally. The time it takes for the energy to slosh from one to the other is proportional to the LC time constant {tau = sq rt (LC)}.

Measured inductance and capacitance for a foot of motor cable are typically (about) L = 50 nH and C = 100 pF. A cascade of a hundred of these 'one foot' LC circuits models a 100 foot cable. The 'one foot' LC time constant (tau), which for series L and parallel C is a delay time, is calculated below. It comes out to be 2.2 nsec per foot, corresponding to a signal speed a little less than half the speed of light.

tau = sq rt (LC) = sq rt (50 nH x 100 pF)

= sq rt (50 x 10^-9 x 100 x 10^-12)

= sq rt (5,000 x 10^-21)

= sq rt (5 x 10^-18)

= 2.2 x 10^-9 sec

(Another characteristic of the cable that depends on L and C is its impedance.) Cable impedance (Z) is sq rt (L/C):

Z = sq rt (50 nH/0.1 nF) = sq rt (500)

= 22 ohms

For this cable, electrical signals travel at 45% of the speed of light in a vacuum. The time constant (tau) of our one foot LC model turns out to be exactly the time it takes the signal to travel one foot in the cable. A cable with the same magnetic energy storage (L) and four times the electric energy storage (4C) (due to a high capacitance dielectric material between the two conductors) would propagate signals half as fast (22.5% of the speed of light), because sq rt (4LC) = 2 sq rt (LC).
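A lumped-circuit simulation can show the delay emerging from static L and C values. This is a hypothetical sketch, not the author's circuit model. It cascades 100 one-foot sections (series L = 50 nH, parallel C = 100 pF) and drives them with a 1 V step through a source resistor equal to sq rt (L/C). The far end is loaded with the same resistance, and the circuit equations are integrated with a simple semi-implicit (symplectic) Euler step. The function name and the matched-resistor choice are assumptions made for illustration.

```python
import math

def ladder_arrival_ns(n_sections=100, l_h=50e-9, c_f=100e-12, steps_per_tau=10):
    """Simulate a hypothetical ladder of n series-L / parallel-C sections.

    A 1 V step drives the first inductor through a source resistor equal to
    sqrt(L/C); the last node is loaded by the same resistance (matched ends).
    Returns the time in nsec when the far-end voltage first reaches half of its
    final 0.5 V value, or None if it never does within the simulated window.
    """
    z0 = math.sqrt(l_h / c_f)      # cable impedance, ohms
    tau = math.sqrt(l_h * c_f)     # one-section LC time constant, sec
    dt = tau / steps_per_tau       # integration time step, sec
    v_step = 1.0                   # source step amplitude, volts
    current = [0.0] * n_sections   # current in inductor k (flowing into node k)
    voltage = [0.0] * n_sections   # voltage on the capacitor at node k
    for step in range(1, 3 * n_sections * steps_per_tau + 1):
        # Inductors: L dI/dt = (voltage behind) - (voltage ahead), old voltages
        for k in range(n_sections):
            behind = v_step - z0 * current[0] if k == 0 else voltage[k - 1]
            current[k] += dt * (behind - voltage[k]) / l_h
        # Capacitors: C dV/dt = (current in) - (current out), new currents
        for k in range(n_sections):
            out = current[k + 1] if k < n_sections - 1 else voltage[k] / z0
            voltage[k] += dt * (current[k] - out) / c_f
        if voltage[-1] >= 0.25 * v_step:   # half of the 0.5 V matched level
            return step * dt * 1e9
    return None

base_ns = ladder_arrival_ns()               # 100 one-foot sections
slow_ns = ladder_arrival_ns(c_f=400e-12)    # same L, four times the C
print(f"arrival: {base_ns:.0f} nsec (C), {slow_ns:.0f} nsec (4C)")
```

The far end should reach half its final voltage roughly 100 x 2.2 nsec after the step, and quadrupling C should double that time, matching the 4C example above. Because the model is a chain of lumped sections rather than a continuous cable, the arriving step edge is somewhat rounded and can show some ringing rather than being perfectly sharp.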

Holy shit --- speed of light from static measurements

Note this interesting fact --- two statically (nothing moving) measured cable parameters let us accurately predict (i.e. calculate) the speed of travel of electrical signals down the cable. They are inductance, which is proportional to magnetic field energy storage, and capacitance, which is proportional to electric field energy storage.

It turns out that the same type of prediction can be made for the speed of radiated electromagnetic waves, like light. This was the eureka (holy shit!) moment for Maxwell in the 1860's. He found he could tie electricity/capacitance together with magnetism/inductance in a wave equation, and it yielded a single speed (through a vacuum) for all traveling electromagnetic waves (light, x-rays, etc).

Maxwell wrote in 1864:

This (electromagnetic wave) velocity is so nearly that of light that it seems we have strong reason to conclude that light itself (including radiant heat and other radiations) is an electromagnetic disturbance in the form of waves propagated through the electromagnetic field according to the electromagnetic laws.

Precursor to Maxwell -- Faraday rotator (2/11)

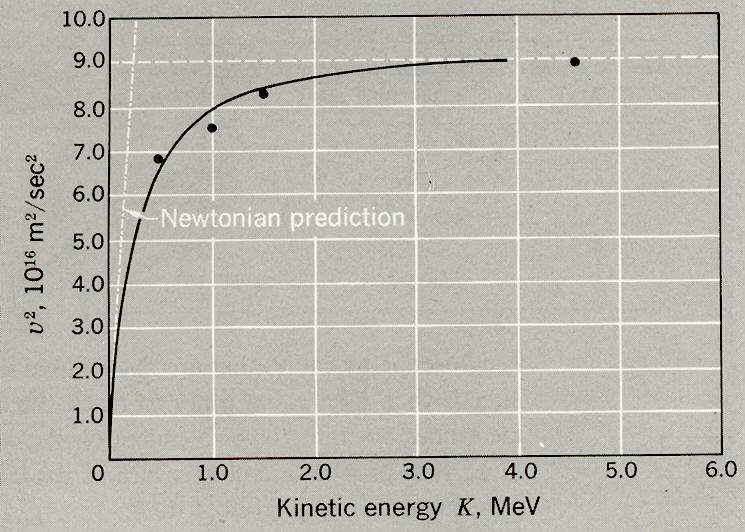

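$$\beta = \mathcal{V} B d$$

where beta is the rotation angle, $\mathcal{V}$ is the Verdet constant of the (transparent) material, B is the magnetic field, and d is the path length (a material effect). Source: Wikipedia, 'Faraday effect'.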

The Faraday effect (or Faraday rotator) is a rotation of the angle of linearly polarized light caused by an axial DC magnetic field in the path. The degree of rotation depends on the strength (& polarity) of the B field and the length of the path. It looks like this is only(?) a material effect, but it also happens in outer space, caused by free electrons. Frankly I don't have a clue as to how to think about this (well, see below).

Here's a thought

The non-mathematical explanation given in Wikipedia is that linear polarized light can be thought of as two counter rotating circular polarizations, and that the DC magnetic field changes the speed of rotation (refractive index) of the two of them differently, causing a shift in the linear polarization angle.

Elsewhere in this essay, by analogy with signal travel in a cable, I argue you can think of light slowing down in a material as being due to a 'capacitance' increase, or more energy (and time) needed for the E field to move the electrons of the atoms of the material. If the slightly moving electrons are thought of as a 'current', then by the right hand rule (I cross B) a rotational force is exerted on the moving electrons. It might very well speed up a circularly polarized E field going one way and retard a circularly polarized E field going the other way.

Still, the important point historically is that in 1845 Faraday knew a magnetic field can change light, so clearly they interact, and this suggests that maybe they interact because light itself is electromagnetic.

Speed of light from static forces

Meters that measure capacitance and inductance have a system of units built into them, such that capacitance is read in uF and inductance in uH. When the speed of light is derived from measurements made with capacitance and inductance meters, it is not immediately obvious what role the scale factors built into the meters play. There is another way to statically measure the speed of light that makes it clear that units don't matter.

Here's the equation for the force between charges q1 and q2 separated by r (Coulomb's law), and the equation for the force per unit length between parallel wires carrying currents I1 and I2 separated by r. Since c = 1/sqrt(e0 u0), we can replace u0 in the wire equation with 1/(e0 c^2).

Force = k x q1q2/r^2

where k = 1/(4 pi e0)

Force/(unit length) = k x I1I2/r

where k = u0/(2 pi) = 1/(2 pi e0 c^2)

From the above you can see that the speed of light can be determined from the ratio of the measured electric and magnetic forces; e0 drops out. As Feynman points out, this measurement of c also does not depend on the definition of charge. If q were to double, then both forces would quadruple (current doubles because it is charge flow per second), so the ratio is unaffected. Units of distance (r) don't matter either, because the measurements can be made at the same distance or at ratioed distances.
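A small numerical sketch of the force-ratio argument, assuming the textbook formulas above and hypothetical 'measurements' (1 microcoulomb charges, 1 A currents, 0.1 m separation) generated from them. The two force constants are backed out of the forces and combined; twice their ratio gives c^2, so e0 never has to be known separately. Doubling the charge (and with it the current) quadruples both forces and leaves c unchanged. The helper names are my own.

```python
import math

# Pre-2019 SI values, chosen because they are mutually consistent.
EPS0 = 8.854187817e-12       # F/m, vacuum permittivity
MU0 = 4 * math.pi * 1e-7     # H/m, vacuum permeability


def simulated_forces(q, current, r):
    """Hypothetical 'measured' forces from the textbook formulas: Coulomb force
    between two charges q, and force per metre between two parallel wires
    each carrying `current`, both at separation r."""
    f_electric = q**2 / (4 * math.pi * EPS0 * r**2)
    f_magnetic_per_m = MU0 * current**2 / (2 * math.pi * r)
    return f_electric, f_magnetic_per_m


def speed_from_forces(f_electric, f_magnetic_per_m, q, current, r):
    """Back out the force constants and combine them.
    k_electric = 1/(4 pi e0), k_magnetic = 1/(2 pi e0 c^2),
    so 2 * k_electric / k_magnetic = c^2 and e0 drops out."""
    k_electric = f_electric * r**2 / q**2
    k_magnetic = f_magnetic_per_m * r / current**2
    return math.sqrt(2 * k_electric / k_magnetic)


c_one = speed_from_forces(*simulated_forces(1e-6, 1.0, 0.1), 1e-6, 1.0, 0.1)
# Double the charge (and so the current): both forces quadruple, c does not change.
c_two = speed_from_forces(*simulated_forces(2e-6, 2.0, 0.1), 2e-6, 2.0, 0.1)
print(c_one, c_two)   # both close to 2.998e8 m/s
```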

Interpreting the LC slow down

Here is a physical interpretation. Inductance slows down the rise of current as energy flows into the magnetic field around the wires. Capacitance (transiently) pulls, or steals, current out of the wire to build up the electric field in the insulator between the wires as the voltage rises. (See the section on displacement current.) Both mechanisms, the retarding of current and the diversion of current, slow down the propagation of the signal down the cable.

Light slows down in transparent materials for the same reason

Very likely the reason for the slowdown of light in transparent materials is the same as in the cable. It is due to the need to repeatedly pump energy into and out of the material's magnetic and electric fields. In fact, by analogy with the cable, I predict (I do not know as I write this) that the speed of light in transparent materials will be proportional to 1/sq rt (e x u), where e is the ratio increase in e0 (electric constant) in materials and u is the ratio increase in u0 (magnetic constant) in materials.

Yup, here are the formulas for the index of refraction as a function of the electric and magnetic parameters of the material. The speed of light in materials is the speed of light in a vacuum (c) divided by the index of refraction (n).

speed of light in materials = c/n

The index of refraction is given in terms of the relative electric permittivity (er, also called the dielectric constant, ke) and the relative magnetic permeability (ur, also written km). Both are dimensionless ratios to the vacuum values:

n = sq rt (er x ur)

e = er x e0 = ke x e0

u = ur x u0 = km x u0

e0 = 8.8542 x 10^-12 F/m

u0 = 4 pi x 10^-7 H/m
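As a quick check that the two forms agree, here is a sketch computing the speed of light in a material both as c/n and as 1/sqrt(e x u). The values er = 2.25 and ur = 1 are an illustrative assumption (roughly like ordinary glass at optical frequencies, n = 1.5), not data from the text.

```python
import math

EPS0 = 8.8542e-12            # F/m (value quoted above)
MU0 = 4 * math.pi * 1e-7     # H/m
C_VACUUM = 1 / math.sqrt(EPS0 * MU0)


def speed_in_material(eps_r, mu_r=1.0):
    """Return (n, c/n, 1/sqrt(e x u)) for relative permittivity eps_r and
    relative permeability mu_r; the last two should be the same number."""
    n = math.sqrt(eps_r * mu_r)                                 # index of refraction
    v_from_n = C_VACUUM / n                                     # v = c / n
    v_from_fields = 1 / math.sqrt(eps_r * EPS0 * mu_r * MU0)    # v = 1/sqrt(e x u)
    return n, v_from_n, v_from_fields


# Assumed illustrative values: eps_r = 2.25, mu_r = 1 (n = 1.5).
n, v1, v2 = speed_in_material(2.25)
print(n, v1, v2)
```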

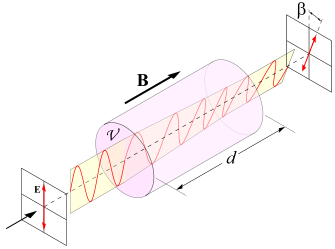

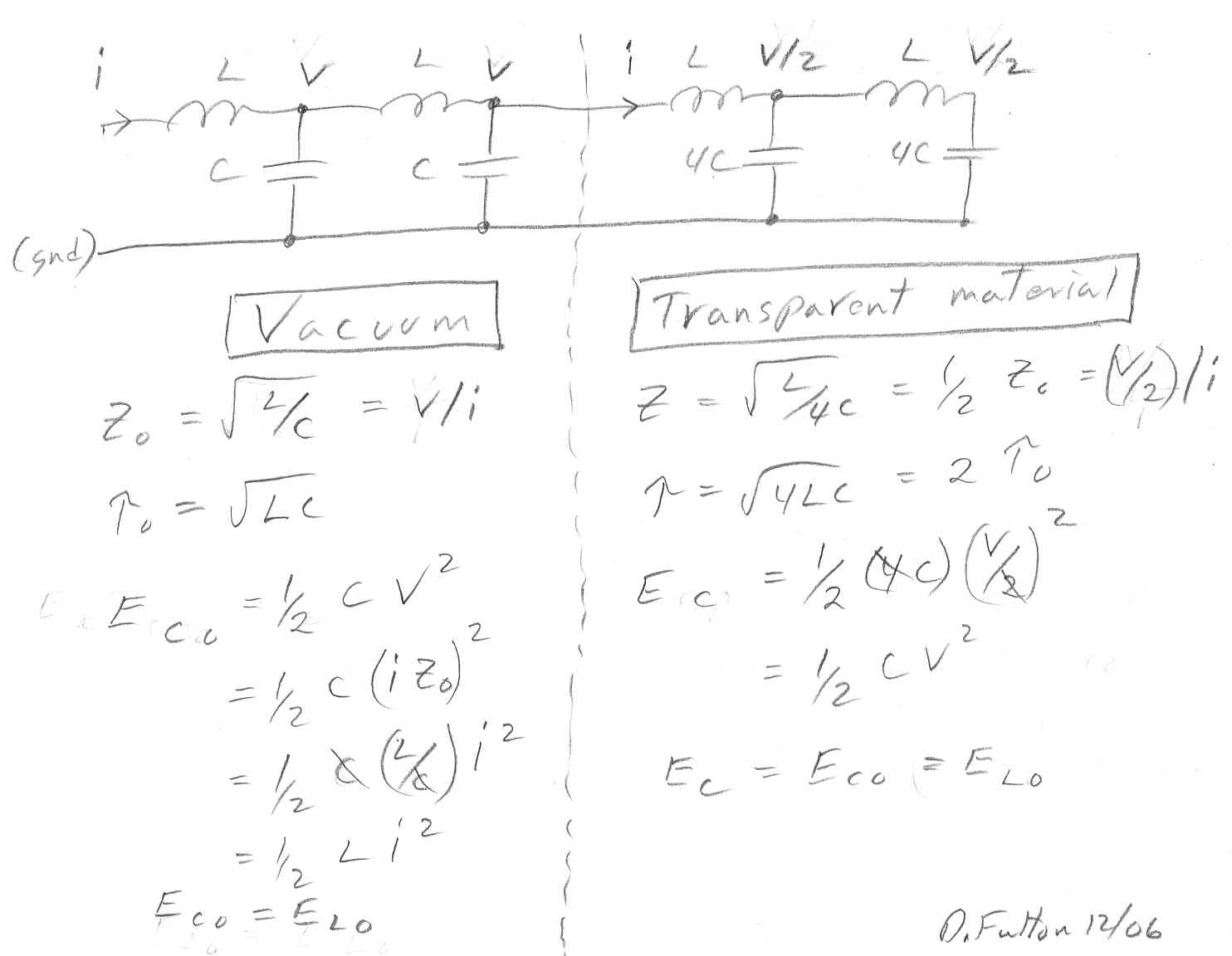

LC ladder model for light entering a material

The LC ladder model of a cable can be extended to (I think) shed some light on the details of what happens when light goes from a vacuum into a transparent material. Light travels more slowly in materials because the electrons in the material raise the electric permittivity (e) above its vacuum value. In the sketch below, the transition of light going from vacuum into a transparent material is modeled by increasing the capacitance (C) by four.

As the sketch shows, four times higher capacitance doubles the time constant per unit length (sqrt{LC}) and halves the impedance (Z = sqrt{L/C}). A doubled LC time constant means it takes twice as long to travel each unit LC length; in other words, the speed of light has been cut in half. A key point to note is that the energy in the capacitor remains unchanged across the transition, and it stays equal to the energy in the inductor. Taking the current (and so H) to be the same on both sides of the transition, the drop of Z by half means the voltage (V = I x Z) drops by half. The result is that the capacitor energy (1/2 x C x V^2) is unchanged, the lower voltage compensating for the increased capacitance.
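The bookkeeping in the paragraph above can be sketched numerically with normalized per-segment values L = C = 1 (arbitrary units). This follows the paragraph's assumption that the current (and so H) is the same on both sides of the transition; reflections at the boundary are ignored in this sketch.

```python
import math


def ladder_segment(L, C):
    """Return (delay per segment, characteristic impedance) for one LC section."""
    return math.sqrt(L * C), math.sqrt(L / C)


L, C = 1.0, 1.0   # normalized per-segment values (arbitrary units)
I = 1.0           # assumed equal current on both sides of the transition

tau_vac, z_vac = ladder_segment(L, C)        # "vacuum" side
tau_mat, z_mat = ladder_segment(L, 4 * C)    # "material" side: C raised x4

v_vac, v_mat = I * z_vac, I * z_mat          # V = I x Z
cap_energy_vac = 0.5 * C * v_vac**2
cap_energy_mat = 0.5 * (4 * C) * v_mat**2
ind_energy = 0.5 * L * I**2                  # same L and same I on both sides

print(tau_mat / tau_vac, z_mat / z_vac)      # delay doubles, impedance halves
print(cap_energy_vac, cap_energy_mat, ind_energy)   # all three equal
```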

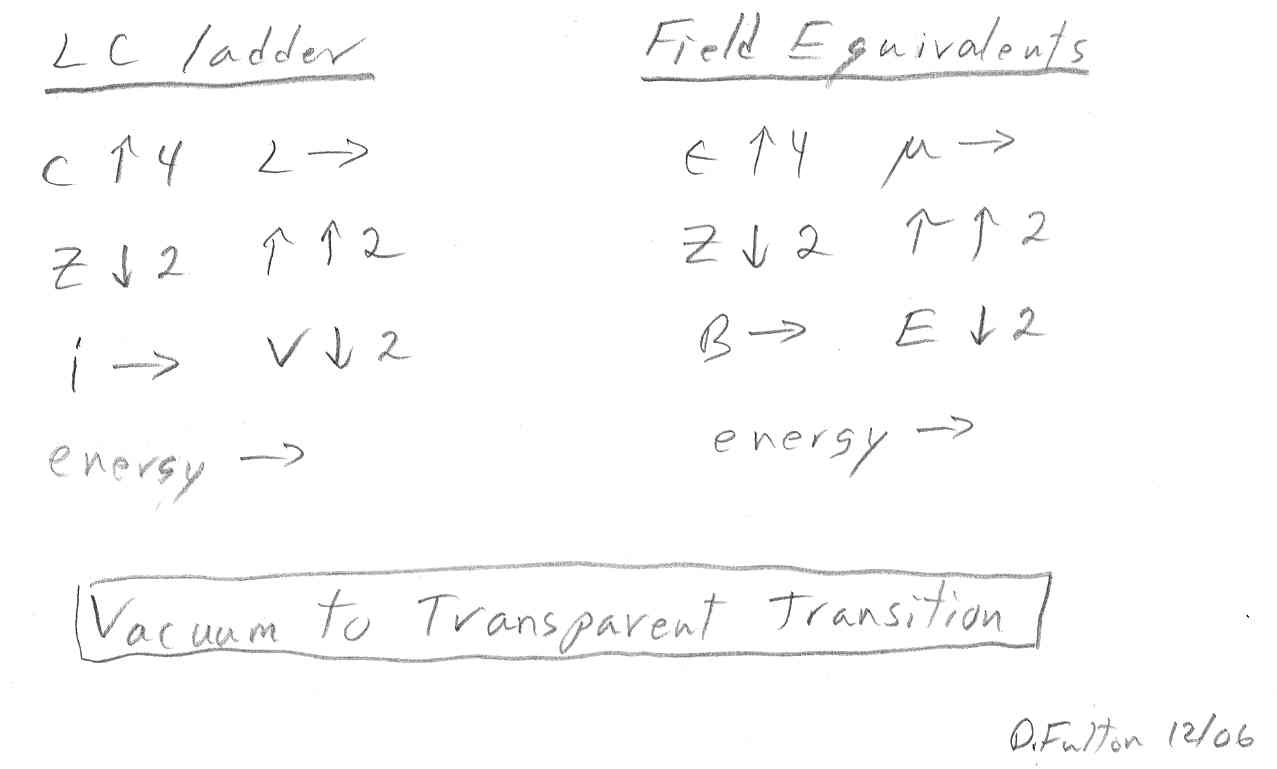

The LC ladder parameters are translated into the field equivalents for light below:

In summary, the LC ladder model predicts that when light enters a material from a vacuum it slows down due to the higher permittivity. At the field level, the E field drops because the impedance of the material is lower than that of the vacuum, with H (and B) in the material the same as in the vacuum. The drop in E just compensates for the increase in permittivity, keeping the E field energy unchanged and equal to the H field energy. The energy balance between electric and magnetic fields, which is a requirement for propagation of the wave, is maintained.

Another (interesting/curious) result of this analysis is that the (semi-classical) 'size' of a photon in a material is the same as in vacuum. The energy stored in the electric field is the electrical energy density (1/2 x e x E^2) integrated over the volume of the field. Since E is down by two when e is up by four, the energy density is unchanged, so with the energy unchanged the field volume, i.e. the spatial extent of the photon's E field, must be the same in the material as in vacuum!

Capacitor with a dielectric material in the gap

The classic textbook capacitor is two parallel plates (close together) with air between them. Inserting a dielectric (insulating) material in the gap can increase the capacitance x3-4, so almost all practical capacitors use dielectrics in the gap. A dielectric increases the electric permittivity (e = ke x e0) of the capacitor by making ke > 1. A higher electric permittivity and higher capacitance mean more energy is stored in a capacitor for a particular voltage. The circuit formula for energy storage in a capacitor is the first formula below. The energy in a capacitor is all stored in the electric field, so the capacitor energy formula can also be written in terms of the electric field energy density and the volume of the gap.

Energy = 1/2 x C x V^2

Energy = 1/2 x ke x e0 x E^2 x (volume in gap)
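A sketch checking that the circuit formula and the field energy density formula give the same energy for an ideal parallel plate capacitor (fringing fields ignored). The plate area, gap, voltage and ke = 3 are hypothetical example values.

```python
import math

EPS0 = 8.8542e-12   # F/m


def capacitor_energy_two_ways(ke, area, gap, volts):
    """Return (circuit-formula energy, field-energy-density energy) in joules
    for an ideal parallel plate capacitor."""
    capacitance = ke * EPS0 * area / gap
    circuit_energy = 0.5 * capacitance * volts**2
    e_field = volts / gap                               # V/m, uniform in the gap
    field_energy = 0.5 * ke * EPS0 * e_field**2 * (area * gap)
    return circuit_energy, field_energy


# Hypothetical example: 1 cm^2 plates, 0.1 mm gap, 10 V, air (ke = 1) vs ke = 3.
air = capacitor_energy_two_ways(1.0, area=1e-4, gap=1e-4, volts=10.0)
dielectric = capacitor_energy_two_ways(3.0, area=1e-4, gap=1e-4, volts=10.0)
print(air, dielectric)   # each pair agrees; the dielectric stores 3x the energy
```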

The relationship in a capacitor between charge, voltage and capacitance is q = CV, which for the parallel plate capacitor can be written

C = q/V = ke x e0 x (area of plates)/(gap between plates)

So when a material is inserted in the gap, the capacitance (C) goes up, charge on the plates (q) goes up, but for a specific voltage (V) the (net) E field (volt/m) in the gap of the capacitor is unchanged. The reason the charge on the plates (q) can go up without the (net) E field in the gap going up is because a partially cancelling E field is formed by electrons in the material as their (dipole) orientation (or charge displacement) is affected by the external E field.

The link below has a little sketch showing the charge on a capacitor's plates and its dielectric. Its text says:

"The electric field causes some fraction of the dipoles in the material to orient themselves along the E-field as opposed to the usual random orientation. This, effectively, appears as if negative charge is lined up against the positive plate, and positve charge against the negative plate."

http://www.pa.msu.edu/courses/2000spring/PHY232/lectures/capacitors/twoplates.html

Question --- The above text makes it sound like capacitor dielectrics have pre-existing (bulk) domains, like a ferromagnet, that are reoriented by the applied E field. Is this true, or does the dipole reorientation occur at the level of individual atoms or molecules?

How material increases capacitance

A key question is how, at the atomic or molecular level, running the E field through a material increases the energy stored in the capacitor. Normally in an atom (or molecule) the positive charge of the nucleus is completely balanced by the surrounding electron cloud. It must be (it seems to me) that the E field, which pushes on positive charge and pulls on negative charge, in some sense 'separates' the opposite charges of all the dielectric atoms (or molecules). In practice this probably means all the electron clouds move a little. It takes work (and time) to separate the charges, so this is where the extra energy goes when the capacitor is charged. This is a reversible operation: the energy is returned when the charges return to their normal equilibrium positions.

If what is happening is reorientation (rotation) of the dipoles of molecules, I think the same argument applies about the work required and its being reversible.

This picture of partial cancellation of an electron's external E field seems a lot like the partial cancellation of the E field in a capacitor with a dielectric material! In both cases the applied E field is doing work to separate (move) a matrix of positive and negative charges. Light slows down (from infinity?) in a vacuum because the E field has to do work on the virtual particle charges as it propagates. This seems directly analogous to the added slowdown of light moving in a material, which is due to the fact that it has to move more charge.

Is this not the explanation of where e0 (electric permittivity) of the vacuum, or alternately the capacitance of the vacuum, comes from? I bet it's directly calculable from the vacuum properties.

Do vacuum properties set the speed of light?

(from my email to Ken Bigelow 1/07)

Ken

Have you ever looked into why the speed of light is 3 x 10^8 m/sec?

Quantum physics says the vacuum is full of virtual charged particles. Particle physicists argue the measured charge of the electron is lower than that of the so-called 'naked' or 'bare' electron, because the electron is always surrounded by a cloud of virtual particles (Ref: 2006 book Dark Cosmos by particle physicist Dan Hooper). The charge is lowered because the virtual cloud is polarized, the E field of the electron pulling the + charges closer and pushing the - charges away. There is data to support this view. Probes of the electron at high energy measure the fine structure constant (e^2/(4 pi e0 x hbar x c)) higher by about 7% (Ref: NIST). The interpretation is that the probe particles are partially penetrating the virtual cloud around the electron.

The polarization of virtual vacuum particles certainly seems a lot like the polarization of charged particles in dielectrics, which increase capacitance and slow down light in materials. So is the speed of light set by virtual vacuum particles, more specifically by the need to do reversible work on them as light propagates? It's an interesting speculation. I found a reference in the journal Nature about 16 years ago that advised particle physicists to seriously consider this idea.

Have you looked into this? I can find little more. My guess is that it's not calculable. Physicists tend to talk about only what they can calculate.

Don
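The fine structure constant mentioned in the email can be evaluated from CODATA 2018 constants to check the low-energy value of about 1/137. The high-energy value used for comparison, about 1/128, is my assumption of the figure commonly quoted near the Z boson mass; with it, the increase comes out at about 7%, consistent with the number in the email.

```python
import math

# CODATA 2018 values (SI)
E_CHARGE = 1.602176634e-19      # C
EPS0 = 8.8541878128e-12         # F/m
HBAR = 1.054571817e-34          # J s
C_LIGHT = 299792458.0           # m/s

# alpha = e^2 / (4 pi e0 hbar c)
alpha_low = E_CHARGE**2 / (4 * math.pi * EPS0 * HBAR * C_LIGHT)
print(1 / alpha_low)                 # about 137.036

# Assumed high-energy value: about 1/128 near the Z boson mass (~91 GeV).
alpha_high = 1 / 128.0
print(alpha_high / alpha_low - 1)    # fractional increase, about 0.07
```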

*** In Oct 2007, nearly nine months after I wrote the above, I found this recent apparent confirmation from a reputable source: 2007 lecture notes from a Univ of Ill physics course on electromagnetism. Note that this appears to be presented to students as fact, not as speculation.

Key quote --- "The macroscopic, time-averaged electric permittivity of free space (e0 = 8.85 x 10^-12 F/m) is a direct consequence of the existence of these virtual particle antiparticle pairs at the microscopic level."

http://online.physics.uiuc.edu/courses/phys435/fall07/Lecture_Notes/P435_Lect_01_QA.pdf

"What is the physics origin of the 1/e0 dependence of Coulomb’s force law?

At the microscopic level, virtual photons exchanged between two electrically charged particles propagate through the vacuum – seemingly empty space. However, at the microscopic level, the vacuum is not empty – it is a very busy/frenetic environment – seething with virtual particle-antiparticle pairs that flit in and out of existence – many of these virtual particle-antiparticle pairs are electrically charged, such as virtual e+ e- , and muon+ muon-, tau+ tau- pairs {heavier cousins of the electron}, 6 types of quark-antiquark pairs q qbar and also W- W+ pairs (the electrically-charged W bosons are one of two mediators of the weak interaction), as allowed by the Heisenberg uncertainty principle (delta E x delta t) > h bar. The macroscopic, time-averaged electric permittivity of free space (e0 = 8.85 x 10^-12 F/m) is a direct consequence of the existence of these virtual particle antiparticle pairs at the microscopic level." (from Univ of Ill physics link above)

Consider the following facts about e0, vacuum, and speed of light

Undisputed

------------------------------

(All this is from a Barry Setterfield oddball paper, 25 pages, 87 references, 2002, about zero point energy.)

(Age 64, religious guy, creationist cosmologist!)

http://www.journaloftheoretics.com/Links/Papers/Setterfield.pdf

Really important to vet these references from Setterfield

1. S.M. Barnett: Quantum electrodynamics - Photons faster than light?; Nature 344, No. 6264 (1990) p 289.

2. G. Barton: Faster-than-c light between parallel mirrors. The Scharnhorst effect rederived; Physics Letters B 237 (1990) p 559-562.

3. K. Scharnhorst: Physics Letters B 236 (1990) p 354.

4. G. Barton, K. Scharnhorst: QED between parallel mirrors: Light signals faster than c, or amplified by the vacuum; Journal of Physics A: Mathematical and General 26 (1993) p 2037-2046. (The Scharnhorst effect is the predicted change in the speed of light in confined spaces, such as between parallel mirrors.)

5. J.I. Latorre, P. Pascual, R. Tarrach: Speed of light in non-trivial vacua; Nuclear Physics B 437 (1995).

A useful Wikipedia article on the Scharnhorst effect exists in Italian, with English references; the English article (no references) is at http://en.wikipedia.org/wiki/Scharnhorst_effect

------------------------------------------

Stuff on the interaction of electrons in orbit with the vacuum, from the same Setterfield paper

-- A paper published in May 1987 shows how the problem may be resolved[69]. The Abstract summarizes: “the ground state of the hydrogen atom can be precisely defined as resulting from a dynamic equilibrium between radiation emitted due to acceleration of the electron in its ground state orbit and radiation absorbed from the zero-point fluctuations of the background vacuum electromagnetic field…” In other words, the electron can be considered as continually radiating away its energy, but simultaneously absorbing a compensating amount of energy from the ZPE sea in which the atom is immersed.

69. H. E. Puthoff, Physical Review D, 35 (1987), p.3266.

70. T. H. Boyer, Phys. Rev. D 11 (1975), p.790.

71. P. Claverie and S. Diner, in Localization and delocalization in quantum chemistry, Vol. II, p.395, O. Chalvet et al., eds, Reidel, Dordrecht, 1976.

-- This development was considered sufficiently important for New Scientist to devote two articles to the topic[72-73]. The first of these was entitled “Why atoms don’t collapse.”

72. Science Editor, New Scientist, July 1987.

73. H. E. Puthoff, New Scientist, 28 July 1990. pp.36-39.

----------------------------------------

The above are tidbits from peer-reviewed journals or reputable sources.

Conclusions (early 2007)

-- (As far as I can find out) the charge of the 'bare' or 'naked' electron has never been calculated.

-- (As far as I can find out) the speed of light in a vacuum has never been calculated from the properties of a vacuum.

-- It seems very likely (to me) that the permittivity (e0) of the vacuum is caused by virtual charge being polarized, and that this mechanism is basically the same as the increase in permittivity caused by the polarized charges in dielectric materials.


There is another interesting possibility for breaking the light-barrier by an extension of the Casimir effect. Light in normal empty space is "slowed" by interactions with the unseen waves or particles with which the quantum vacuum seethes. But within the energy-depleted region of a Casimir cavity, light should travel slightly faster because there are fewer obstacles. A few years ago, K. Scharnhorst of the Alexander von Humboldt University in Berlin published calculations[4] showing that, under the right conditions, light can be induced to break the usual light-speed barrier. (Encyclopedia of Astrobiology, Astronomy, & Spaceflight -- David Darling)

Scharnhorst, K. Physics Letters B 236: 354 (1990).

In 2002, physicists Alain Haché and Louis Poirier made history by sending pulses at a group velocity of three times light speed over a long distance for the first time, transmitted through a 120-metre cable made from a coaxial photonic crystal.[1]

[1] Electrical pulses break light speed record, physicsweb, 22 January 2002; see also A Haché and L Poirier (2002), Appl. Phys. Lett. v.80 p.518.

(very interesting) classic oddball paper: http://www.ldolphin.org/setterfield/redshift.html

Is this what sets the speed of light?

(I write this section as pure speculation in early 2007; I have seen no references on this as I write.)

We know the speed of light is determined (only) by the vacuum parameters e0 and u0 {c = 1/sqrt(e0 x u0)}. If there is a virtual particle cloud explanation for u0 comparable to the explanation for e0 (and I bet there is), then can't e0 and u0, and hence the speed of light, be calculated from the (Planck-scale) virtual particle properties of the vacuum?

In other words have we not (in a sense) figured out why the speed of light in a vacuum is 3 x 10^8 m/sec?

Does not light slow in materials for the same reason?

(see above for Univ of Ill material)

So is this not also the explanation of why (at the atomic level) light travels slower in materials? It takes extra time (assuming power is not infinite) for the E field to move the electron clouds in the atoms (or molecules) of the material over and then back as the light wave passes.

Note: the above seems to me a much more straightforward (classical/circuit) explanation for the slowdown of light than an 'explanation' I sometimes see from physics types. They sometimes say the slowdown of light is due to photons being absorbed and then re-emitted by the atoms of the material. However, physics types seem to assume that photon absorption and re-emission in many other cases, like mirrors, occurs instantaneously. Or is there really a delay with real mirrors, and it's just that most discussions assume ideal mirrors, in the same way an electrical engineer often assumes ideal, lossless conductors?

Deriving the equation of signal velocity in a cable

Consider an (idealized) cable that consists of two (metal) cylinders, one inside the other. The two cylinders act like the two wires in lamp cord, meaning the current that travels down one cylinder returns via the other cylinder. To keep the equations very simple, we assume the inside and outside cylinders are close to the same size, with only a small gap between them. With this assumption we can treat the gap between the cylinders like an ideal parallel plate capacitor (just bent around) with a uniform E field across the gap.

Assign

h = small segment length of a long cable (say 1 ft)

r = radius (to center of gap)

d = gap (between cylinders)

From circuit analysis of the LC model of the cable we know the time it takes for a signal to go (say) 1 ft down a cable is just the time constant of the circuit model for 1 ft, which is tau = sq rt {LC}. Generalizing to a cable segment of length h, the velocity of travel down the cable is

vel = distance/time = h/sq rt (LC)

where

L = inductance for length h

C = capacitance for length h

The E (or D) field is all between the cylinders, and its direction is radial, arrows pointing from one cylinder to the other. The equation for an ideal parallel plate capacitor is C = e x A/d. To get the area we just unwrap (lengthwise) a segment of length h (say 1 ft) of the cable to make a parallel plate capacitor with area (2 pi r) x h and gap d.

C = e x area/d

C = e x (2 pi r) x h/d

or = e x h x (2 pi r)/d

The H (or B) field is all between the cylinders, and looking at the cable end on, its direction is circular, arrows wrapping around the axis of the cable. The general equation of inductance is L x i = N x flux. Here N = 1 and the flux is (u x H) x area. If we cut open a cable segment lengthwise, we can see the flux (H or B) flows through the area (h x d) formed by the cable segment length (h) and the gap between cylinders (d).

L = flux/i

= u x H x area/i

= u x H x (h x d)/i

From Maxwell's equations we use Ampere's law (H x length = N x i), where the path follows H (or B) around the cylinders. The (average) radius is r, so the path length is (2 pi r) and (H x length = N x i) simplifies to

H x (2 pi r) = i

H = i/(2 pi r)

Substituting H into the equation for L we get

L = u x H x (h x d)/i

= (u x h x d /i) x i/(2 pi r)

L = u x h x d/(2 pi r)

Finally, the velocity of a signal down the cable is just the length of the cable segment (h) divided by the propagation time for the segment, tau = sq rt {LC}, where L and C are the inductance and capacitance per length segment (h) of the cable:

velocity = distance/time = h/sq rt {LC}

= h/sq rt {u x h x [d/(2 pi r)] x e x h x (2 pi r)/d}

= h/sq rt {u x e x h^2}

= h/(h x sq rt {u x e})

= 1/ sq rt {u x e}
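A numerical sketch of the derivation above, assuming hypothetical cable dimensions and an insulator with er = 4. It builds L and C for one segment from the formulas derived above and shows that h/sqrt(LC) matches 1/sqrt(e x u), whatever the geometry.

```python
import math

EPS0 = 8.8542e-12
MU0 = 4 * math.pi * 1e-7


def thin_gap_cable(h, r, d, eps_r=1.0, mu_r=1.0):
    """L, C and signal velocity for one segment (length h) of the idealized
    thin-gap cable with radius r and gap d."""
    e = eps_r * EPS0
    u = mu_r * MU0
    C = e * (2 * math.pi * r) * h / d        # unwrapped parallel plate capacitor
    L = u * h * d / (2 * math.pi * r)        # flux through h x d, with H = i/(2 pi r)
    velocity = h / math.sqrt(L * C)          # segment length / segment delay
    return L, C, velocity


# Hypothetical dimensions: 0.3 m segment, 5 mm radius, 0.2 mm gap, eps_r = 4.
L, C, v = thin_gap_cable(h=0.3, r=5e-3, d=2e-4, eps_r=4.0)
print(L, C, v, 1 / math.sqrt(4.0 * EPS0 * MU0))   # the last two agree
```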

Note the equation we have calculated for the speed of travel of electrical signals down a cable is exactly the same as the general equation for the speed of light in materials!

velocity = 1/sq rt {e x u}

(in cable and materials)

c = 1/sq rt {e0 x u0} (in vacuum)

Calculating energy in E and H fields

First we need to calculate the impedance (Z = ratio of volt/amp) of the cable. Interestingly, the impedance of cables like this (technically known as transmission lines), even though composed of reactive elements (L, C), is in real ohms, just like a resistor.

Z = v/i = sq rt (Z_L x Z_C) = sq rt (jwL/jwC) = sq rt (L/C)

= sq rt {[u x h x d/(2 pi r)] / [e x (2 pi r) x h/d]}

= sq rt {(u x d^2)/((2 pi r)^2 x e)}

= d/(2 pi r) x sq rt (u/e)

Note Z of free space is known to be

Z free space = sq rt (u0/e0)

= sq rt (4 pi x 10^-7/8.8 x 10^-12)

= 377 ohms

The cable Z came out to be d/(2 pi r) x sq rt (u/e). This is just Z (in materials), sq rt (u/e), scaled by the geometric constraints of the cable on the length of the E and H vectors, specifically the ratio of the length of the E vector (d) to the length of the H vector (2 pi r). What this means (almost for sure) is that the E and H fields of light in free space are the same size (E and H vectors are the same length).
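A short sketch evaluating the two impedance formulas: free-space Z from u0 and e0, and the thin-gap cable Z = d/(2 pi r) x sqrt(u/e). The radius and gap are hypothetical example dimensions, and the cable formula is only the small-gap approximation used in this derivation.

```python
import math

EPS0 = 8.8542e-12
MU0 = 4 * math.pi * 1e-7


def thin_gap_cable_impedance(r, d, eps_r=1.0, mu_r=1.0):
    """Z = sqrt(L/C) = (d / (2 pi r)) * sqrt(u / e); the segment length cancels."""
    u = mu_r * MU0
    e = eps_r * EPS0
    return (d / (2 * math.pi * r)) * math.sqrt(u / e)


z_free_space = math.sqrt(MU0 / EPS0)
print(z_free_space)                                  # about 376.7 ohms
print(thin_gap_cable_impedance(r=5e-3, d=2e-4))      # hypothetical 5 mm radius, 0.2 mm gap
```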

Energy in the electric field = 1/2 x C v^2 = 1/2 x e x E^2 x volume

= 1/2 x {e x (2 pi r) x h/d} x v^2

Energy in the magnetic field = 1/2 x L i^2 = 1/2 x u x H^2 x volume

= 1/2 x {u x h x d/(2 pi r)} x i^2

substituting v = i x Z in the electric energy formula

= 1/2 x {e x (2 pi r) x h/d} x v^2 (electric energy)

= 1/2 x {e x (2 pi r) x h/d} x i^2 x (d/(2 pi r))^2 x (u/e)

= 1/2 x {h} x i^2 x (d/(2 pi r)) x (u)

= 1/2 x {u x h x d/(2 pi r)} x i^2 (magnetic energy)

hence half the total energy is stored in the electric field and half in the magnetic field
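The same energy bookkeeping done numerically, assuming hypothetical cable dimensions, a 1 A traveling-wave current and er = 4: with v = i x Z, the capacitor energy equals the inductor energy.

```python
import math

EPS0 = 8.8542e-12
MU0 = 4 * math.pi * 1e-7


def segment_energies(i, h, r, d, eps_r=1.0):
    """(electric, magnetic) energy in joules in one cable segment carrying a
    traveling wave with current i, using v = i x Z."""
    e, u = eps_r * EPS0, MU0
    C = e * (2 * math.pi * r) * h / d
    L = u * h * d / (2 * math.pi * r)
    Z = math.sqrt(L / C)
    v = i * Z
    return 0.5 * C * v**2, 0.5 * L * i**2


# Hypothetical numbers: 1 A, 0.3 m segment, 5 mm radius, 0.2 mm gap, eps_r = 4.
electric, magnetic = segment_energies(i=1.0, h=0.3, r=5e-3, d=2e-4, eps_r=4.0)
print(electric, magnetic)     # equal: half the energy in each field
```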

How E and H fields 'fly' down a cable

It seems to me a good starting point to understand how light travels in space is to understand (in detail) how voltage transitions (steps and ramps) travel down cables. It's not too much of a stretch to picture a light beam in space as having fields (something) like those in a cable, in other words thinking of light as traveling in an invisible (straight) cable, or light pipe, in space. Light is known to be a transverse wave, meaning the E and H fields point at right angles to the direction of travel, and the E and H fields are at right angles to each other too. This is how the fields in the cable point: the E field points radially out (or in) and the H field goes around the cable.

Of course, the E and H fields of light (photons) in space don't have conductors limiting the spatial extent of their fields, so light photons must (in some way) have a spatial field limit based on their energy, which (I think) is equivalent to saying based on the time rate of change of the source and of the fields at a specific point in space.

The cylindrical geometry of the fields is, of course, forced by the geometry of the conductors of the cable. The common solutions of Maxwell's equations for light in free space are linearly polarized and circularly polarized waves. (I have read that there is also a cylindrical solution, but I have not found it.) Each local region of the cable, however, does appear to be a good analog for linearly polarized light in free space, because the E and H fields in a local region are fixed in orientation, crossed, and transverse to the direction of travel.

Another issue is how the E and H fields are synchronized in time. The field equations of light show E and H are in time phase, meaning they increase (and decrease) at the same time. When I look at the phase relationship in the LC ladder model of a cable I don't get a simple answer. The voltage across each capacitor is the local E field, that's clear. However, the two inductors that connect to each capacitor have different currents (with different phases) in them, so which inductor current sets the local H field? Here is some detail:

The H field (via the right hand rule) depends on the current in the segment. SPICE simulation shows the current divides and flows in two or more caps at the same time, so there must be higher current in the local segment inductor than in the cap. The cable load on each segment looks resistive (Z = sq rt (L/C)) (known to be the case in transmission lines and confirmed by simulation), so the current in the local segment inductor has two components. One is the current in the segment cap, which is proportional to the derivative of the voltage (i = C dv/dt). The other component is the load current into the rest of the cable, which is in phase with the voltage (i = v/Z = v/sq rt (L/C)). The load current, of course, is the current flowing in the next segment's inductor.

The E field is the segment capacitor voltage divided by the gap (E = v/d). The E field and the H field, as defined by the current in the next segment, are in phase because they are both in phase with the segment voltage. However, the current in this segment's inductor also includes the local capacitor current, so it differs (somewhat) in phase and magnitude from the current in the next segment's inductor, hence the ambiguity.

** I think I see how to resolve the ambiguity. The ladder can be made finer and finer by taking the capacitance and inductance per ft, then per inch, then per mil, etc. As resolution gets finer, L and C both get smaller (in proportion), with the ratio L/C staying constant. The difference between the currents in two adjacent segment inductors is just the current in the capacitor, but as the ladder gets finer the impedance of each cap goes up (Z cap = 1/(2 pi x freq x C)), so the capacitor current goes down. The current in the next segment inductor (v/sq rt (L/C)) is unchanged because the ratio of L to C is unchanged. So in the limit of a fine-resolution ladder, the current in a segment inductor approaches the current in the next segment inductor. The ambiguity vanishes.

Conclusion --- LC model of a cable shows the E field (capacitor voltage) and H field (inductor currents) are in phase.
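A rough numerical check of the ladder picture, as my own sketch (not the SPICE setup described above): a lossless LC ladder with normalized L = C = 1, driven by a 1 V step from an ideal source and terminated at the far end in Z = sqrt(L/C), is stepped forward in time with a simple semi-implicit Euler scheme. It records when each node first reaches half the step, so the front's travel time per segment can be compared with sqrt(LC). The discrete ladder adds some ringing behind the front, so the match is approximate; the function name and all parameter values are hypothetical.

```python
import math


def simulate_ladder(n_nodes=80, L=1.0, C=1.0, v_source=1.0, t_end=70.0, dt=0.02):
    """Step response of a lossless LC ladder.

    Node k has capacitor C to ground; inductor k (current i[k]) feeds node k
    from node k-1 (inductor 0 is fed by the ideal step source). The last node
    is terminated in Z = sqrt(L/C) to suppress reflections. Semi-implicit Euler:
    update currents from old voltages, then voltages from the new currents.
    Returns {node: first time its voltage reaches v_source / 2}.
    """
    z = math.sqrt(L / C)
    v = [0.0] * n_nodes
    i = [0.0] * n_nodes
    crossing = {}
    t = 0.0
    for _ in range(int(round(t_end / dt))):
        i[0] += dt * (v_source - v[0]) / L
        for k in range(1, n_nodes):
            i[k] += dt * (v[k - 1] - v[k]) / L
        for k in range(n_nodes - 1):
            v[k] += dt * (i[k] - i[k + 1]) / C
        v[-1] += dt * (i[-1] - v[-1] / z) / C     # matched termination
        t += dt
        for k in range(n_nodes):
            if k not in crossing and v[k] >= v_source / 2:
                crossing[k] = t
    return crossing


crossings = simulate_ladder()
# Front travel time per segment between nodes 10 and 50, in units of sqrt(LC).
per_segment = (crossings[50] - crossings[10]) / 40
print(per_segment)   # close to 1, i.e. one sqrt(LC) per LC section
```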

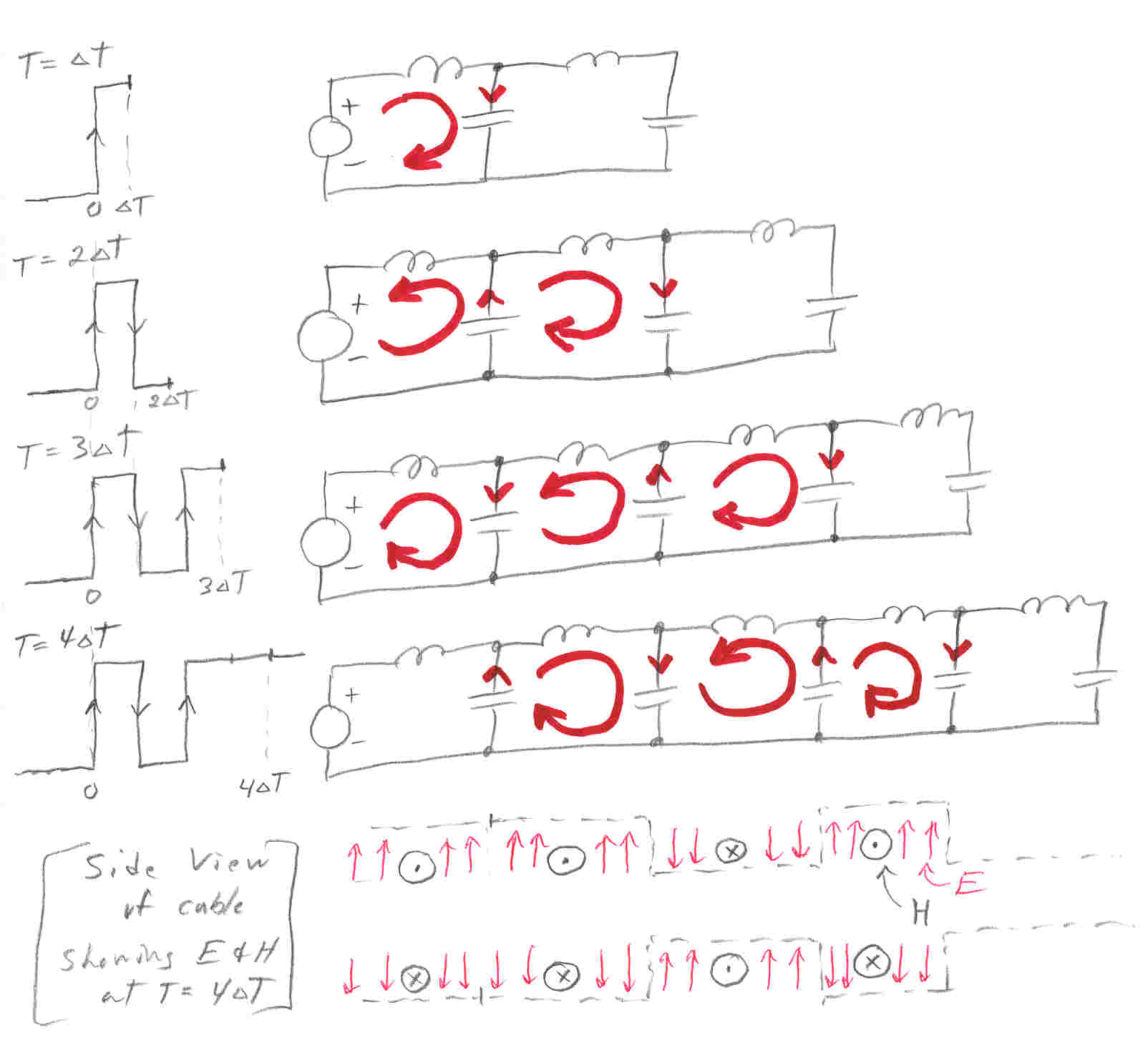

My sketches below show the details of a voltage step (0V to 100V, instantaneous) flying down a cable (at about 1/2 the speed of light). For a 100V step as shown, the E field (radial) and H field (circular) in the cable after the edge flies by are totally static. In other words there is (effectively) no frequency here. This is a quasi-static case.

The sketch below shows a burst (three transitions) propagating down a cable. Note the currents in the LC ladder and the directions of the E and H fields reverse at each transition. I don't think there is any frequency limit (in principle) for an ideal lossless cable. So if 'delta t' = 6.66 x 10^-16 sec, which is half the period of a cycle of blue light, is this (like) the cable analog of a blue light photon?
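A quick arithmetic check of that 'delta t' (my own calculation): a half period of 6.66 x 10^-16 sec gives a frequency of about 7.5 x 10^14 Hz and a vacuum wavelength of about 400 nm, at the violet end of blue.

```python
C_LIGHT = 299792458.0          # m/s

half_period = 6.66e-16         # s, the 'delta t' used above
period = 2 * half_period
frequency = 1 / period         # Hz
wavelength = C_LIGHT * period  # m, vacuum wavelength
print(frequency, wavelength * 1e9)   # roughly 7.5e14 Hz and 399 nm
```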

Feynman's simple model of radiation

In Feynman's famous Lectures on Physics (Vol II, pages 18-5 to 18-9) he shows how to generate a single rectangular pulse of radiation. This is done by starting with two superimposed, infinite planes of charge (one positive, one negative) that are not moving. Initially there is no E or H anywhere outside the planes. One plane is then moved (downward) a little at constant speed and then stopped. Current, being the rate of flow of charge, steps up from zero to a constant value (positive) while the charge is moving and then steps back to zero when the charge stops moving.

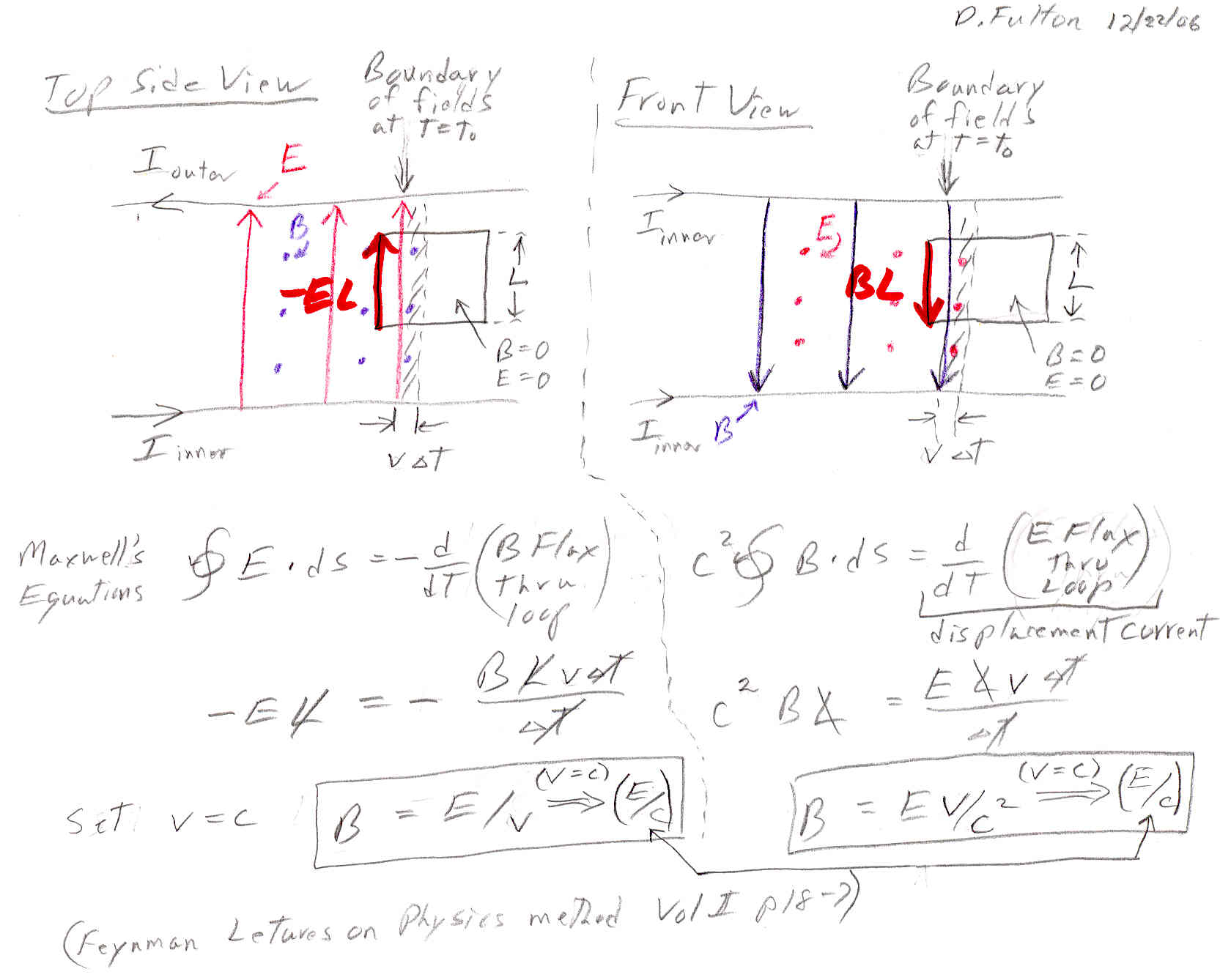

Feynman shows that a thin plane of spatial quadrature, time in-phase E and H radiation fields flies out at velocity c. E points opposite the direction of the motion and H is in quadrature such that (E cross H), the Poynting vector, points in the direction of propagation. These fields have no sinewaves: E and H simultaneously step up to a fixed value and then back to zero. He shows this solution is compatible with Maxwell's equations by drawing two rectangular loops in spatial quadrature at the leading boundary of the fields and applying the two Maxwell line integral equations (see below). Since the loops do not include the plane, there is no current through the loops, so J = 0.

Line integral of E ds = - d/dt (flux of B thru loop)

c^2 x Line integral of B ds = (flux of J thru loop)/e0 + d/dt (flux of E thru loop)

Feynman's text here says: "The fields have 'taken off,' they are propagating freely through space, no longer connected in any way with the source. ... How can this bundle of electric and magnetic fields maintain itself? The answer is by the combined effects of the Faraday's law (#1 above) and the new term of Maxwell (#2 above). ... They maintain themselves in a kind of a dance, one making the other, the second making the first, propagating onward through space."

Notice the language "one making the other, the second making the first" is a little vague about the time sequence. Do the fields create each other sequentially or simultaneously? Maybe for times set by the uncertainty principle (Planck's constant divided by energy) it can be said that the fields make each other in turn, but from the analysis it seems fair to say that the changing E and B are simultaneously creating each other.

More on Feynman's model -- another proof E and H are in time phase -- (my email to Ken Bigelow 1/07)

Ken

I was looking at my Feynman Lectures on Physics (Vol II, 18-4) and found he introduces radiated electromagnetic fields with a very simple example. The source is contrived (a superimposed infinite sheet of +charge and –charge, one of which he moves a little at a constant speed and then stops), but the beauty of this example is that the radiated fields that fly off the charge planes are very simple. As the field 'pulse' passes a point in space, the E and H step up from zero to a fixed value and then back down to zero again. He draws two rectangular loops in spatial quadrature at the leading edge of the fields and shows that you can calculate E and H (almost by inspection) and that this result satisfies Maxwell's integral equations.

In Feynman's example there are no sinewaves, just two steps, so there is no way E and H can be in time quadrature. The traveling “little piece of field” (Feynman's words) is the superposition of two field steps created when the charge sheet starts and stops moving. Application of Maxwell's equations seems to show E and H are simultaneously creating each other. Feynman in his text is a little vague about exactly how the fields create each other, saying “by a perpetual interplay – by swishing back and forth from one field the other – they must go on forever ... They maintain themselves in a kind of a dance -- one making the other, the second making the first --- propagating onward through space.” (Maybe it could be argued they sequentially create each other down near the Planck time by application of the uncertainty principle.)

The same analysis can be applied, with the same result (E and H step simultaneously), to local regions of the ideal cylindrical cable that I have been studying.

Don

Applying Feynman's analysis to the cable

Feynman's analysis can be applied to show that the spatial quadrature, time in-phase fields inside an ideal cable are consistent with Maxwell's equations. Since the amplitude of the fields is constant, the changing flux within the loops is just the new flux added in time (delta t) as the fields propagate. The right side of each loop is in the volume the fields have not yet reached, so only the left vertical leg of each rectangular loop sees a non-zero field.

The analysis shows that the fields generated by d/dt of the other field's flux are consistent with each other, and with sustained propagation, only when the velocity of propagation equals c. The analysis also shows that the magnetic field is always equal to the electric field divided by the speed of light (B = E/c).
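Feynman's two loops reduce to two scalar relations for a field step moving at some speed v. A minimal sketch, assuming a step of height E and loops whose leading leg has unit length (the length cancels): Faraday's law gives E = vB, and the Ampere-Maxwell law with no conduction current gives B = u0 e0 v E = vE/c^2. The helper names are hypothetical; the code only checks that both loops demand the same B when v = c, and that B is then E/c.

```python
import math

EPS0 = 8.854e-12              # permittivity of free space, F/m
MU0 = 4e-7 * math.pi          # permeability of free space, H/m (classical defined value)
C = 1.0 / math.sqrt(EPS0 * MU0)  # speed of light from e0 and u0, m/s

def b_from_faraday(e_field, v):
    """Faraday loop: E = v*B, so B = E/v."""
    return e_field / v

def b_from_ampere_maxwell(e_field, v):
    """Ampere-Maxwell loop (no current): B = u0*e0*v*E = v*E/c^2."""
    return MU0 * EPS0 * v * e_field

def loops_agree(e_field, v, rel_tol=1e-9):
    """True when both loop equations demand the same B."""
    return math.isclose(b_from_faraday(e_field, v),
                        b_from_ampere_maxwell(e_field, v), rel_tol=rel_tol)

E = 100.0  # arbitrary step height, V/m
for v in (0.5 * C, C, 2.0 * C):
    print(f"v = {v / C:.1f} c  consistent: {loops_agree(E, v)}")
print(f"c = {C:.4e} m/s, B = E/c = {E / C:.4e} T")
```

Multiplying the two relations gives v^2 = 1/(u0 e0) = c^2, which is why any other trial speed fails.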

The criterion B = E/c means that the energy stored in the magnetic field (in both radiated fields and the cable fields) is equal to the energy stored in the electric field (see below).
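The energy claim follows from the standard energy densities e0 E^2/2 (electric) and B^2/(2 u0) (magnetic). A short check, with an arbitrary assumed field of 100 V/m, puts B = E/c into both and compares them:

```python
import math

EPS0 = 8.854e-12        # F/m
MU0 = 4e-7 * math.pi    # H/m
C = 1.0 / math.sqrt(EPS0 * MU0)

def electric_energy_density(e_field):
    """u_E = e0*E^2/2, in J/m^3."""
    return 0.5 * EPS0 * e_field ** 2

def magnetic_energy_density(b_field):
    """u_B = B^2/(2*u0), in J/m^3."""
    return b_field ** 2 / (2.0 * MU0)

E = 100.0      # assumed field strength, V/m
B = E / C      # the B = E/c condition from the loop analysis
u_e = electric_energy_density(E)
u_b = magnetic_energy_density(B)
print(f"u_E = {u_e:.4e} J/m^3, u_B = {u_b:.4e} J/m^3")
```

Algebraically, B^2/(2 u0) = E^2/(2 u0 c^2) = e0 E^2/2, because 1/c^2 = e0 u0.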

Drawing a fundamental conclusion about what sets the speed of light

We have seen above that when Maxwell's equations are written using c rather than u0, B and u0 come out to be secondary. Maxwell's equations force the value of B to be slaved to E such that the energy stored in both fields is equal. If propagation is in a material such that capacitance and e are higher, then Maxwell forces B to be higher too, so that the energy balance between electric and magnetic fields is maintained. So even though c = 1/sqrt(e x u), the conclusion I draw is that it is really the electric permittivity (e) that sets the speed of light:

e (or e0) alone sets the speed of light

Cable E and H fields -- another perspective (Nov 15, 06 reply email to Ken Bigelow)

On transmission lines --

The transmission line I have been thinking about is a simple, ideal, cylindrical type (one cylinder inside another) that is lossless (no series R, no shunt G) and can be modeled by a (long) L,C ladder. While it seems counter-intuitive that only reactive components (L and C) can produce a real impedance, this is the case. The reason is that a transmission line is a distributed system, not a lumped system. Look at your equation for Z, which is correct: the j's cancel, and Z comes out as a real number of ohms.
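To see the j's cancel numerically, the sketch below evaluates Z = sqrt(jwL / jwC) with complex arithmetic. It also computes the per-unit-length L and C of an ideal coax from the standard formulas L' = (u0/2pi) ln(b/a) and C' = 2pi e0 e_r / ln(b/a). The radii, the 1 MHz frequency and e_r = 2.25 are assumed example values, not numbers from the text.

```python
import cmath
import math

EPS0 = 8.854e-12        # permittivity of free space, F/m
MU0 = 4e-7 * math.pi    # permeability of free space, H/m

def coax_per_meter(a, b, eps_r=1.0):
    """Per-unit-length L (H/m) and C (F/m) of an ideal coax with inner radius a, outer radius b."""
    log_ratio = math.log(b / a)
    l_per_m = MU0 / (2.0 * math.pi) * log_ratio
    c_per_m = 2.0 * math.pi * EPS0 * eps_r / log_ratio
    return l_per_m, c_per_m

def line_impedance(l_per_m, c_per_m, freq):
    """Z = sqrt(jwL / jwC): the j and w factors cancel, leaving a real value."""
    w = 2.0 * math.pi * freq
    return cmath.sqrt((1j * w * l_per_m) / (1j * w * c_per_m))

a, b = 0.5e-3, 2.0e-3          # assumed inner/outer radii, meters
for eps_r in (1.0, 2.25):      # air gap vs. an assumed solid dielectric
    l_per_m, c_per_m = coax_per_meter(a, b, eps_r)
    z = line_impedance(l_per_m, c_per_m, freq=1e6)
    v = 1.0 / math.sqrt(l_per_m * c_per_m)   # propagation speed along the line
    print(f"eps_r={eps_r}: Z = {z.real:.1f} ohm (imag part {z.imag:.1e}), v = {v:.3e} m/s")
```

For the air gap this gives roughly 83 ohms and v close to 3 x 10^8 m/sec; with e_r = 2.25 only C changes, so the speed falls by sqrt(2.25) = 1.5.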

An ideal, lossless transmission line loaded (terminated) with a resistor R, where R = sqrt(L/C), has no reflection from the end. This is the only terminating impedance that produces no reflection. This proves that the impedance looking into each LC segment is a real impedance of Z ohms. This fact is in every textbook on transmission lines, and you can find it at this Wikipedia link too (set ZL = Z0 in their input impedance formula):

http://en.wikipedia.org/wiki/Transmission_line
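Setting ZL = Z0 in the standard lossless-line formulas can be checked directly. A minimal sketch, with Z0 = 50 ohms and an electrical length of 1 radian chosen as assumed example values: the reflection coefficient (ZL - Z0)/(ZL + Z0) is zero only for ZL = Z0, and the input impedance Z0 (ZL + j Z0 tan(beta l))/(Z0 + j ZL tan(beta l)) then equals Z0 regardless of line length.

```python
import math

def reflection_coefficient(z_load, z0):
    """Voltage reflection coefficient at the load: (ZL - Z0)/(ZL + Z0)."""
    return (z_load - z0) / (z_load + z0)

def input_impedance(z_load, z0, beta_l):
    """Lossless-line input impedance: Z0*(ZL + jZ0 tan(bl))/(Z0 + jZL tan(bl))."""
    t = math.tan(beta_l)
    return z0 * (z_load + 1j * z0 * t) / (z0 + 1j * z_load * t)

z0 = 50.0  # assumed characteristic impedance sqrt(L/C), ohms
for z_load in (25.0, 50.0, 100.0):
    gamma = reflection_coefficient(z_load, z0)
    zin = input_impedance(z_load, z0, beta_l=1.0)  # arbitrary electrical length, radians
    print(f"ZL={z_load:5.1f}  gamma={gamma:+.3f}  Zin={zin:.2f}")
```

Only the matched load gives gamma = 0 and a purely real Zin; the mismatched loads produce a complex Zin that depends on beta l.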

From the LC ladder here is the argument that E and H in the cable are in phase. Consider the voltage on a capacitor. The E field in the capacitor having units of volts/meter is of course 'in phase' with the capacitor voltage. In the LC ladder there are two inductors connected to each cap, let's call them the input L (toward front) and output L (toward rear). The current in the output L is just the current to the rest of the cable and based on the fact that the impedance of the cable is real (Z ohms) the current in this inductor is also 'in phase' with the capacitor voltage (iout = Vcap/Z).

Now, I was at first troubled by the fact that the current in the input inductor cannot be in phase with the capacitor voltage. The reason is that the current in the input inductor is the (vector) sum of two currents: the output inductor current, which is in-phase with the capacitor voltage, and the capacitor current, which of course leads the capacitor voltage by 90 degrees.

But consider what happens as the LC ladder is made finer and finer with more and smaller LC elements. The finer the LC model, the better it represents the distributed L and C of the cable. The current in the output inductor, being set by the ratio L/C (i = v/sqrt(L/C)), is unchanged as L and C get smaller. On the other hand, the current in the capacitor approaches zero as the value of each C approaches zero (icap = C dv/dt). So in the limit of a fine LC model, the current in the input inductor approaches the current in the output inductor.
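The limiting argument can be checked with phasors. A minimal sketch, assuming (as the text does) that the rest of the ladder presents a real Z0, so the output-inductor current V/Z0 is in phase with the capacitor voltage, while the capacitor current jwC_seg V leads by 90 degrees. The cable is split into N rungs of C' x length / N each; the 10 m length, C' = 40 pF/m, Z0 = 83 ohms and 10 MHz drive are made-up example values.

```python
import math

def input_inductor_phase_deg(c_per_m, z0, length, n_segments, freq):
    """Phase (degrees) of the input-inductor current relative to the capacitor voltage.

    Assumes the rest of the ladder looks like a real Z0, so i_out = V/Z0 is in
    phase with V, while i_cap = j*w*C_seg*V leads V by 90 degrees.
    """
    w = 2.0 * math.pi * freq
    c_seg = c_per_m * length / n_segments   # capacitance of one ladder rung
    v = 1.0                                 # capacitor voltage phasor (reference)
    i_out = v / z0                          # in phase with v
    i_cap = 1j * w * c_seg * v              # leads v by 90 degrees
    i_in = i_out + i_cap                    # phasor (vector) sum
    return math.degrees(math.atan2(i_in.imag, i_in.real))

# Assumed example: 10 m of air-gap coax, C' = 40 pF/m, Z0 = 83 ohm, 10 MHz drive.
for n in (1, 10, 100, 1000, 10000):
    phase = input_inductor_phase_deg(40e-12, 83.0, 10.0, n, 10e6)
    print(f"N = {n:5d}: input current leads V by {phase:.4f} deg")
```

The phase lead equals atan(w C_seg Z0), which falls roughly as 1/N once N is large, matching the text's conclusion that the input and output inductor currents converge.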

Bottom line --- In the LC model each capacitor represents the local E field (E = v/gap) and the current in the two connecting inductors represents the local H field (H = i/(2 pi r) = (v/Z) x (1/(2 pi r))). Hence the local E and H fields are in phase with the local capacitor voltage and with each other.
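The text approximates E as v/gap; for an exact coax the radial field is the standard E(r) = v/(r ln(b/a)), with Z0 = (1/2pi) sqrt(u0/e0) ln(b/a) for an air gap. A minimal sketch using those formulas, with assumed radii and voltage, shows that E and H (both proportional to v, so in phase with it) keep a fixed ratio E/H = sqrt(u0/e0), about 377 ohms, at every radius. That is the same statement as B = E/c.

```python
import math

EPS0 = 8.854e-12        # F/m
MU0 = 4e-7 * math.pi    # H/m
C = 1.0 / math.sqrt(EPS0 * MU0)

def coax_fields(v, a, b, r):
    """Radial E (V/m) and circular H (A/m) at radius r in an ideal air-gap coax
    carrying a matched wave of voltage v."""
    log_ratio = math.log(b / a)
    z0 = math.sqrt(MU0 / EPS0) * log_ratio / (2.0 * math.pi)  # characteristic impedance
    current = v / z0                         # in phase with v on a matched line
    e_field = v / (r * log_ratio)            # exact coax field (not v/gap)
    h_field = current / (2.0 * math.pi * r)  # H = i/(2 pi r), as in the text
    return e_field, h_field

a, b, v = 0.5e-3, 2.0e-3, 10.0   # assumed radii (m) and voltage (V)
for r in (0.6e-3, 1.0e-3, 1.9e-3):
    e, h = coax_fields(v, a, b, r)
    b_field = MU0 * h
    print(f"r={r * 1e3:.1f} mm  E/H={e / h:.1f} ohm  B*c/E={b_field * C / e:.6f}")
```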

This means that if the cable is driven with a voltage ramp (i.e. a voltage that linearly increases with time), then as the fields fly down the cable (at 3 x 10^8 m/sec if the gap is air), at each point in the cable the local E (radial) and H (circular) in the gap between the cylinders increase in amplitude together at the same rate. And, it's important to note, the time rate of increase of the E and H field strengths in the cable at any point depends only on the time rate of increase of the external driving voltage. (The last sentence is a further thought that was not in the email.)

That's enough for this email.

Don

footnote (post email)--- Do cable E & H fields have anything to do with photons? In other words is there any way the fields in the cable can be considered photons, or do photons 'arise' only from free unrestrained traveling E and H fields?

Wild, interesting thoughts of physics poster 'photonquark' on photons

A wild physics poster named photonquark (retired electronic designer!) says this:

We must think of a photon with respect to the size of the observer and the size of the photon. If we see the photon as a small particle, then the fields must be considered in a circular geometry, and if we draw a picture that accurately represents these fields, they will have to have some curvature in them, but if we take any small elemental volume of the fields in the photon, we will have a picture of the orthogonal electric and magnetic field lines that we are used to thinking about for plane waves.

This is interesting because it applies to the cable fields above. Photonquark concludes that a photon is a sphere of fields with a diameter of one wavelength. (He also says E and H are in time quadrature!) More from photonquark:

In text books this plane wave is graphed as a sine wave of electric field amplitude, and a cosine wave of magnetic field amplitude, with the sinusoidal waveforms offset by 90 degrees. (really!) Notice that there is no location on this energy wave graph where the photon energy is zero. The sum of the electric and magnetic energy, at any and every instant and location of the wave graph is finite, and never zero. All the energy of the photon is contained within one full cycle of oscillation.

http://forum.physorg.com/index.php?showtopic=1030&st=0

Electromagnetic wave propagation

The cylindrical cable analyzed is a special case of what is called a waveguide. The metal of a waveguide puts boundary conditions on the fields (E fields must enter the metal perpendicular to its surface). An EM wave that propagates with neither electric nor magnetic field in the direction of propagation is known as a TEM (Transverse ElectroMagnetic) wave.

Cylindrical cable reference (confirms H is circular)

http://solar.fc.ul.pt/lafspapers/coaxial.pdf

1/2 C v^2 = 1/2 L i^2, where L and C are per unit length and v and i are at some point in the cable
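The circuit version of the energy balance follows from i = v/Z0 with Z0 = sqrt(L/C): 1/2 L (v^2 C/L) = 1/2 C v^2. A short check, using per-meter values close to the air-gap coax example (assumed, not from the text) and an assumed 10 V:

```python
import math

def line_energies_per_meter(v, l_per_m, c_per_m):
    """Electric (1/2 C v^2) and magnetic (1/2 L i^2) energy per meter of a matched line."""
    z0 = math.sqrt(l_per_m / c_per_m)  # real characteristic impedance
    i = v / z0                         # current accompanying voltage v on a matched line
    return 0.5 * c_per_m * v ** 2, 0.5 * l_per_m * i ** 2

w_e, w_m = line_energies_per_meter(10.0, 2.77e-7, 4.01e-11)
print(f"electric {w_e:.4e} J/m, magnetic {w_m:.4e} J/m")
```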

Note on time relationship of E and H

The 'wrong' personal site below by Ken Bigelow (it came up first in a Google photon search) has many pages on light and photons at a low to moderate technical level. It argues that the E and H fields of a light photon, which Ken admits are usually shown as in phase, should be in time quadrature (meaning sine and cosine with a 90 degree phase difference). The 'right' site is a very nice PowerPoint presentation on light from Georgia Tech at an advanced technical level. Even if you cannot follow the mathematics (div and curl), it's worth looking at because many characteristics of light are derived and plotted.

A 90 degree time phase difference seems intuitively reasonable because Maxwell's equations show that changing E fields make H fields and vice versa, so how can they both increase and decrease at the same time? Isn't it more likely that E creates H, then H creates E, and so on? You often see this simplified explanation put forward for light; it's like a cat chasing its tail. This always made sense to me, and I will admit I was surprised when I found light equations (online) that showed E and H to be in time phase.

Ken's site also makes the argument that E and H in time phase is unreasonable because for an instant twice per cycle both fields will be exactly zero, so Ken asks, "At this time where is the energy?" His solution to this 'problem' is to put the E and H fields in time quadrature. Energy stored in a field depends on the field magnitude squared, and there is a trigonometric identity (sine^2 + cosine^2 = 1), so with E and H in time quadrature, Ken argues, the energy of a photon is constant. This (naively) seems a strong argument.
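The two cases can be compared numerically. A minimal sketch, assuming a plane wave of amplitude E0 = 100 V/m with B0 = E0/c, sampled at one fixed point over one cycle: it totals the energy density e0 E^2/2 + B^2/(2 u0) with B either in phase with E (sine) or in quadrature (cosine). In phase, the energy density at that point drops to zero twice per cycle; in quadrature it is constant. Both have the same cycle average, e0 E0^2/2.

```python
import math

EPS0 = 8.854e-12
MU0 = 4e-7 * math.pi
C = 1.0 / math.sqrt(EPS0 * MU0)

def energy_density(e_field, b_field):
    """Total field energy density e0*E^2/2 + B^2/(2*u0), J/m^3."""
    return 0.5 * EPS0 * e_field ** 2 + b_field ** 2 / (2.0 * MU0)

def sample_cycle(e0, quadrature, n=360):
    """Energy density at n evenly spaced phases over one cycle at a fixed point."""
    b0 = e0 / C
    samples = []
    for k in range(n):
        phase = 2.0 * math.pi * k / n
        e = e0 * math.sin(phase)
        b = b0 * (math.cos(phase) if quadrature else math.sin(phase))
        samples.append(energy_density(e, b))
    return samples

for quadrature in (False, True):
    u = sample_cycle(100.0, quadrature)
    label = "quadrature" if quadrature else "in phase"
    print(f"{label:10s} min={min(u):.3e}  max={max(u):.3e}  mean={sum(u) / len(u):.3e}")
```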

However, Ken is wrong. Firstly, it's almost inconceivable that a solution to the light wave equation, first derived 150 years ago by Maxwell, showing that E and H are in time phase could be wrong. What Ken is missing, I think, is an understanding of the frequency of the photon. Its frequency, which can vary over many, many orders of magnitude, depends only on the source of the light and is totally unrelated to the speed of the photon.

The 'zero energy' of photon argument is most easily addressed by considering photons at radio wave frequencies. After all we understand how these photons are generated, they come off of radio antennas. The reason there appears to be zero energy in the fields of a photon at null points in the cycle is because at those times there really is zero energy. And why is that? It is simply because the antenna sends out no energy periodically, twice each cycle of the radio frequency when the AC voltage driving the antenna goes through zero.

It's not unlike 60 Hz, single-phase AC power in your home. At null points in the line voltage (every 8.33 msec) you are receiving no power from the power plant. That's why a fast light source like a fluorescent bulb flickers 120 times per second. Modern electronics that need constant power have large energy storage capacitors in them that provide 'ride-thru' power for a few msec as the line voltage crosses through zero.
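The 8.33 msec figure is half of a 60 Hz period. A minimal sketch, assuming a 120 V rms supply and a 144 ohm resistive load (both made-up values giving 100 W average): the power delivered to a resistor goes as sin^2, so it touches zero at every voltage zero crossing, 120 times per second, and peaks at twice the average.

```python
import math

def instantaneous_power(v_peak, r_load, freq, t):
    """Power p(t) = v(t)^2 / R delivered to a resistive load from a sine supply."""
    v = v_peak * math.sin(2.0 * math.pi * freq * t)
    return v * v / r_load

FREQ = 60.0                      # line frequency, Hz
V_PEAK = 120.0 * math.sqrt(2.0)  # assumed 120 V rms household supply
R_LOAD = 144.0                   # assumed resistive load, ohms (100 W at 120 V rms)

half_period = 1.0 / (2.0 * FREQ)   # spacing of voltage zero crossings, s
print(f"zero crossings every {half_period * 1e3:.2f} ms -> {2 * FREQ:.0f} power dips per second")
for k in range(3):
    t = k * half_period
    print(f"t = {t * 1e3:5.2f} ms  p = {instantaneous_power(V_PEAK, R_LOAD, FREQ, t):.2e} W")
```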

wrong http://www.play-hookey.com/optics/transverse_electromagnetic_wave.html

right http://www.physics.gatech.edu/gcuo/lectures/3803/OpticsI03Maxwell_sEqns.ppt

What's a photon of light?

'Photon' is the name given to a specific 'chunk' of light energy that acts (in some ways) like a particle. For example, a single photon is able to eject an electron from an atom such that it can be collected and counted (photoelectric effect).

Here's one definition --- When an electron in an atom orbiting the nucleus 'jumps' from a higher orbit at atomic energy level (E2) to a lower orbit at energy level (E1), a photon is emitted (by the electron) that carries away the difference in energy. The relation between the photon energy and its frequency is usually given in the textbooks this way:

freq = (E2-E1)/h

where

h = 6.66 x 10^-34 joule-sec (Planck's constant)

While frequency is easy to define for a repetitive waveform, I find it a somewhat slippery concept when applied to a single photon, especially considering that the quantum physics types are unable to describe its waveform. Hey guys, is a photon like one cycle or not? Apparently quantum physics is silent on this issue, photons are in some sense unknowable.

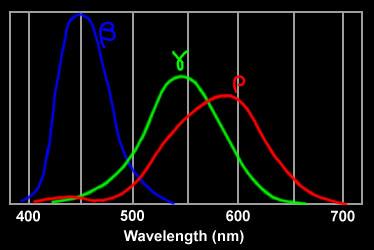

Visible light is composed of photons in the energy range of around 1.7 to 3 eV. Photon energy is inversely proportioal to wavelength (proportional to frequency). Blue light with a wavelength of 400 nm has energy of 3.1 ev. A red photon with 700 nm wavelength has an energy of (400 nm/700 nm) x 3.1ev = 1.77 ev.

Check

E = h x freq = h x c/wavelength

= 6.66 x 10^-34 x 3 x 10^8/400 x 10^-9

= 0.05 x 10^-17

= 5 x 10^-19 joule

(1 ev = 1 volt x 1.6 x 10^-19 coulomb)
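The same check can be scripted for both ends of the visible range. A minimal sketch, using rounded standard values for h, c and the joule/eV conversion (assumed constants, not from the text): E = h c/wavelength converted to eV, and freq = (E2 - E1)/h for the resulting energy.

```python
H = 6.626e-34    # Planck's constant, J*s
C = 2.998e8      # speed of light, m/s
EV = 1.602e-19   # joules per electron-volt

def photon_energy_ev(wavelength_m):
    """E = h*c/wavelength, converted from joules to eV."""
    return H * C / wavelength_m / EV

def photon_frequency(delta_e_ev):
    """freq = (E2 - E1)/h for an energy difference given in eV."""
    return delta_e_ev * EV / H

for nm in (400, 700):
    e_ev = photon_energy_ev(nm * 1e-9)
    print(f"{nm} nm: {e_ev:.2f} eV, freq = {photon_frequency(e_ev):.3e} Hz")
```

Running it reproduces the 3.1 eV and 1.77 eV figures above; the frequency column is just c/wavelength, as expected.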