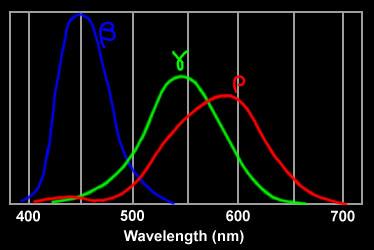

three types of cone cells' spectral response

How does the eye see color?

The physicists tell

us light comes into the eye in the form of discrete, independent, packets

of energy called photons. Feynman in a 1979 video lecture (from Auckland

NZ) on photons said the eye can detect as few as five photons. (Experiments

show human rod cells may be able to detect a single photon.) The eye must

somehow 'assign' color to the photons it detects. If not to individual

photons, then to groups of photons arriving close together in time and

position on the retina. And, as a further complication, we can obviously

see many, many subtle shades of color. So how does the eye do this?

The eye might (theoretically) be able to directly sense the frequency and/or energy of the photons it receives. But sensing lots of slightly different colors this way would very likely require many, many different photosensitive molecules spanning a range of energy levels, which makes this approach very unlikely.

But there is a simpler way to sense color. This is the method used in color film and in digital cameras, and it is used by the eye too. The light is measured three times (obviously not each photon, but statistically) by broadband detectors each optimized for one of three primary colors (R, G, B). In a digital camera the surface of the CCD detector has an array of primary color (R, G and B) filters above identical light (intensity) detectors. By knowing how strongly the R, G, and B optimized detectors respond, the color of the light is determined.

In the eye this is done by three types of cone cells. Each type of cone cell is sensitive to a fairly wide range of photon frequencies (energy) with one type most sensitive to short wavelengths (blue), one medium wavelengths (green), and one long wavelengths (red). See below. Note that shape and gain of these curves must be quite predictable and stable for the brain to correctly interpret the ratio of the three cone cell outputs it gets as color. It (apparently) takes a thousand or more photons to get an output from a cone cell.

three types of cone cells' spectral response

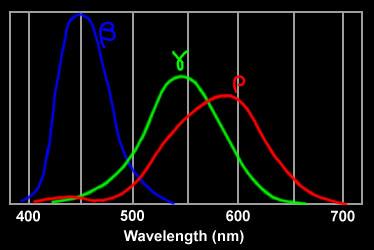
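To make the 'ratio of three outputs' idea concrete, here is a rough Python sketch. The peak wavelengths are the ones quoted later in this essay (445, 540 and 565 nm), but the Gaussian curve shape and the 40 nm width are purely my assumptions for illustration; the real cone curves are measured, asymmetric and broader in places. The names `cone_response` and `cone_ratios` are made up for this example.

```python
import math

# Peak wavelengths (nm) are the handprint.com values quoted later in this essay.
CONE_PEAKS_NM = {"S": 445.0, "M": 540.0, "L": 565.0}
# ASSUMPTION: Gaussian curves with a 40 nm standard deviation stand in for the
# real (measured, asymmetric) cone sensitivity curves.
ASSUMED_SIGMA_NM = 40.0


def cone_response(wavelength_nm, peak_nm, sigma_nm=ASSUMED_SIGMA_NM):
    """Relative sensitivity (0..1) of one cone type at one wavelength."""
    return math.exp(-0.5 * ((wavelength_nm - peak_nm) / sigma_nm) ** 2)


def cone_ratios(wavelength_nm, intensity=1.0):
    """Share of the total cone output contributed by each cone type."""
    raw = {name: intensity * cone_response(wavelength_nm, peak)
           for name, peak in CONE_PEAKS_NM.items()}
    total = sum(raw.values())
    return {name: value / total for name, value in raw.items()}


for wl in (450, 500, 550, 600):
    shares = cone_ratios(wl)
    print(wl, {name: round(share, 3) for name, share in shares.items()})
```

Note that the shares depend only on wavelength, not on intensity, which is why a ratio is a sensible thing for the brain to use as 'color'.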

The eye also contains (lots of) much more sensitive cells called rod cells. These are the cells that can be triggered by 1 to 5 photons. These cells are used when light is dim. Since there is only one type of rod cell and its response is quite wideband (see below), there is no way to figure the color of these photons. In effect rod cells act as photon counters with the light intensity being proportional to the photon count. {To get a (normal) image the photon count needs to be scaled by a non-linear curve called the gamma curve, but we won't go into that here. You can read about this in references of digital camera CCD's.}

rod cells' spectral response

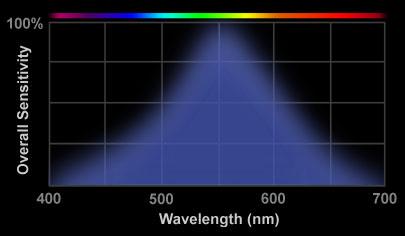

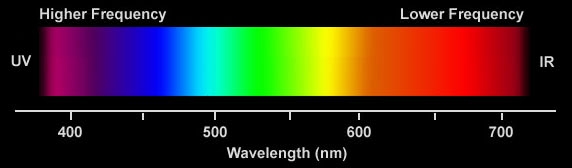

color and wavelength --- visible spectrum is about

an octave (380 nm to 750 nm)

Rods and cones both sense light with retinal, a pigment molecule, embedded in (different) proteins. A photon hitting retinal causes an electron to jump between energy levels created by a series of alternating single and double bonds in the molecule, which causes the molecule to twist. The change in geometry initiates a series of events that eventually cause electrical impulses to be sent to the brain along the optic nerve.

Rods are incredibly efficient photoreceptors. Rods amplify the retinal changes in two steps (with a gain of 100 each for a total gain of 10,000), allowing rods (under optimal conditions) to be triggered by individual photons. Cones are one thousand times less sensitive than rods, so presumably it takes a minimum of 1,000 photons to trigger a cone.

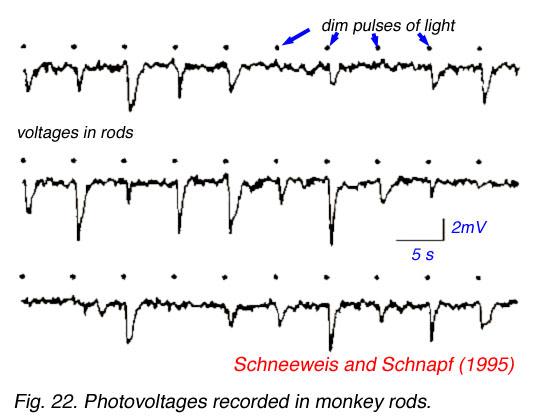

Here are measured voltages in rods excited by repeated very dim light flashes ('dots'). While the data is a little noisy (as all biological data is), it looks like there are (basically) three different size outputs: no response (zero amplitude pulse), a small pulse, and a big pulse. The interpretation of the data (by the experimenters) is that the response is quantized and proportional because the rod is detecting 0, 1 or 2 photons (in a flash). Well maybe. It sure would be nice to compare the 'averaged' response for each category and maybe see a few triple pulses too.

rod cells in dim light --- no pulse ( 0 photon), small

pulse (1 photon), large pulse (2 photons)
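How often should triple pulses show up? A rough way to estimate it is to treat the number of photons absorbed per flash as a Poisson count, the usual model for rare, independent random events. That model and the mean of 0.7 absorbed photons per flash are my assumptions; the essay's source gives no such number. The function name `poisson_probability` is made up for this sketch.

```python
import math


def poisson_probability(k, mean):
    """Probability of exactly k photon absorptions when the average is `mean`."""
    return math.exp(-mean) * mean ** k / math.factorial(k)


# ASSUMPTION: an average of 0.7 absorbed photons per flash; the experiment's
# actual value is not given in this essay.
assumed_mean = 0.7

for k in range(4):
    print(f"{k} photons: {poisson_probability(k, assumed_mean):.3f}")
```

With this assumed mean, triples would be expected in only about 3% of flashes, so a short record could easily show none.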

Retinal in solution when exposed to light changes to different isomers depending on the wavelength. It is found that the spectral response of retinal in solution is the same as the spectral response of the eye, so it must be (mostly) retinal that sets the wide spectral response of cells of the eye.

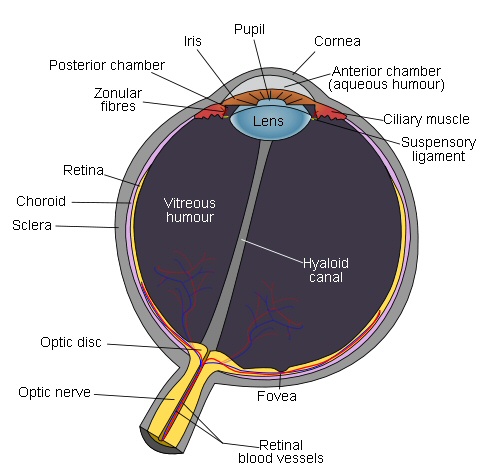

Cone vision is much sharper than rod vision. It's a resolution issue related to how the cells are 'wired' to the brain. Each cone cell connects to a different optic nerve fiber, so the brain is able to precisely determine the location of the visual stimulus. Rod cells, however, may share a nerve fiber with as many as 10,000 other rod cells.

In resolution terms 7 million cone cells translates to 7 mpixels (or maybe 2.33 mpixel triads) equivalent to a digital camera. If the 120 million rods are wired in, say, 1k bundles, then rod resolution would be 1.2 x 10^8/10^3 = 120,000 pixels, which is pretty low.
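The pixel arithmetic is easy to redo with other guesses for the bundle size. This little sketch just repeats the numbers from the paragraph; the 1,000 rods per fiber figure is the 'say, 1k' guess above, not a measured value.

```python
cone_count = 7_000_000        # cones per eye (figure used in the text)
rod_count = 120_000_000       # rods per eye (figure used in the text)
rods_per_fiber = 1_000        # the text's "say, 1k" guess, not a measured value

cone_pixels = cone_count                  # one cone per nerve fiber
cone_triads = cone_count / 3              # if three cones make one color pixel
rod_pixels = rod_count // rods_per_fiber  # pooled rods act as one pixel

print(f"cone pixels: {cone_pixels:,}")
print(f"cone triads: {cone_triads:,.0f}")
print(f"rod pixels:  {rod_pixels:,}")
```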

Good eye links:

http://photo.net/photo/edscott/vis00010.htm

http://www.chemistry.wustl.edu/~edudev/LabTutorials/Vision/Vision.html

How photons are sensed by retinal in the eye

Retinal

is a relatively simple molecule with about 35 atoms (total) of hydrogen

and carbon that is the light 'detector' in rod cells and all three types

of cone cells. One particular double bond of retinal can 'break' (in 0.2

picosec) if an electron in the bond absorbs a visible photon of light and

is excited. With the bond broken the end of the molecule rotates (in nsec).

This shape change affects the protein to which retinal is attached and

begins a cascade leading to the firing of a nerve impulse to the visual

cortex of the brain. While retinal is the light sensitive molecule in both

rods and cones, the proteins are different, the cascades are different

and the gains are different in the different cell types.

Two eye photon puzzles

I was unable

to discover an explanation of two key issues related to photon sensing

by the eye.

a) What is the mechanism that widens the photon frequency absorption spectrum? How is the curve so stable and predictable?

b) How is it that 1,000+ photons are needed to fire a cone cell? Does it require that 1,000+ molecules of retinal in a cell be activated? Over what time interval must the 1,000+ photons arrive?

Broad frequency response puzzle

(update 4/09 -- see below for Prof Nathans' email that

explains the shape of the spectral response)

Not only is

the photon absorption frequency range of eye cells very wide, it is very

important to note that the spectral response curves of the three types

of cone cells must be both stable and predictable, otherwise

the brain would not be able to accurately determine color, which is determined

from ratioing the outputs of the three different cone cells. If

the cone spectral response curves weren't stable, we would see the same

object at different times as being a different color. If the cone spectral

response curves weren't predictable, i.e. they varied from person to person,

different people would see the same object as being a different color.

The basic puzzle (to me) is that the frequency spectra of atoms and molecules, both emission and absorption, due to an electron changing orbit are usually shown as a collection of seemingly randomly spaced very narrow lines, not at all like the wide, smooth absorption spectra of eye cells. Why is the eye absorption so different? Is it a thermal effect? Are there other examples of wide spectra?

Thermal energy makes wide, continuous spectra. When electrons vibrate thermally, they emit a wide spectrum of frequencies that depends on the temperature. There is an ideal model of this type of wideband, thermal radiation called black body radiation. It has a specific, calculable energy vs frequency curve that varies with temperature and is the same for all materials.

Spectrum of an incandescent bulb in a typical flashlight

(4,600K)

The sun is (very close to) a black body with a surface temperature of 5,780 degrees kelvin. A prism, by its ability to spread sunlight into a rainbow spectrum, neatly demonstrates that the atoms near the surface of the sun (likely hydrogen and helium) are emitting a wide spectrum of frequencies. A hydrogen atom has both continuous and discrete spectrum; the continuous part represents the ionized atom.
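One 'calculable' property of the black body curve is where it peaks. Wien's displacement law gives the peak wavelength (per unit wavelength) as a constant divided by the temperature. The sketch below applies it to the two temperatures mentioned in this section; treating the flashlight bulb as an ideal black body is an assumption, and `peak_wavelength_nm` is a name made up for this example.

```python
WIEN_B_M_K = 2.897771955e-3  # Wien displacement constant, metre-kelvin


def peak_wavelength_nm(temperature_k):
    """Wavelength (nm) at which a black body's spectrum peaks (per unit wavelength)."""
    return WIEN_B_M_K / temperature_k * 1e9


# Temperatures are the ones quoted in the text; the bulb is ASSUMED to be an
# ideal black body for this estimate.
for label, temp_k in (("flashlight bulb", 4600), ("sun surface", 5780)):
    print(f"{label}: {temp_k} K -> peak near {peak_wavelength_nm(temp_k):.0f} nm")
```

For the sun this lands near 500 nm, in the middle of the visible range, while the cooler bulb peaks toward the red end.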

Are the atoms on or near the surface of the sun that emit visible sunlight ionized? A NASA reference on the sun says this: "much of the sun's surface (about 6,000 degrees) consists of a gas of single atoms", however, both the inside of the sun and its corona are (largely) ionized. Since the sun is more than 90% hydrogen, most of the inside of the sun is a plasma of independent protons and electrons. The corona of the sun reaches millions of degrees.

Ideas/thoughts

a) It's a thermal/heat effect --- The narrow spectra shown are often astronomical

and when measured in the lab the gas density is very low.

b) It's somehow related to the nature of the double bond and/or stress

in the bond, perhaps modulated somehow by the protein to which it is attached?

c) Intermediate molecules exist with sensitivity peaks at different frequencies.

Con -- these intermediate peaks are shown as wide too.

d) Maybe there are a lot of slightly different variants of the light sensitive

molecule in each cell, so it's a statistical thing? The broad curve

is really a mixture of narrow curves. Con -- not even a hint of this in

references.

a) seems most likely --- Here is Wikipedia on emission spectrum ---- When the electrons in the element are excited, they jump to higher energy levels. As the electrons fall back down, and leave the excited state, energy is re-emitted, the wavelength of which refers to the discrete lines of the emission spectrum. Note however that the emission extends over a range of frequencies, an effect called spectral line broadening.

From the Wikipedia

article on fluorescent spectroscopy it appears that the energy of fluorescent

photons (absorbed and emitted) depends on energy stored in the "vibrational

states" of the material.

---------------------------------------------------------------------------------------------

Photosynthesis and light detection in the eye

A good question

is ---

At the

molecular level, does the eye detect solar photons in any way like a plant

does? As far as I can tell, the answer is no, photon capture and processing

in the eye is quite different from plants. When you think about it, this

is not too surprising. The plant is only trying to extract energy from

its photons. It has no interest in knowing where exactly the photons hit

or what their frequency is. Whereas the eye is detecting photons so it

can trigger nerve cells, and the eye also needs to sense where the photons

hit and in what part of the spectrum they lie so the brain is able to

form a color image.

A related issue, which I have written of elsewhere, is how the eye detects photons. Does the eye capture photons in (basically) the same way as a photosynthetic plant captures photons, or is the eye's cellular machinery totally different from plants? In photosynthetic plants the spectral response for efficiency reasons needs to reasonably match the spectrum of sunlight, but (as far as I can see) the spectral response of plants need not be particularly stable or predictable.

In fact the eye uses different molecules for light detection (chromophores) than plants do for photosynthesis. The animal eye detects light with retinal (general class: rhodopsins). While rhodopsins are not used by plants for photosynthesis, they are used in some bacteria for (proton pumping) photosynthesis.

In the eye, of course, the desired final result is not energy stored in molecules, but the tickling of neurons. Of particular concern in the eye is what shapes the spectral response of the light detection, because the fidelity of color vision depends on the (ratio of the) eye's three cone cells' spectral responses being both predictable and stable. The brain detects color by measuring the ratio of the output of the three cone cells. So how does the eye's cellular machinery achieve a predictable, stable, spectral response?

** One factor

that (I suspect) is important to color fidelity is that the (light

sensitive) photopsins of the three types of cone cells of the retina differ

from each other in a few amino acids. When you want to keep a ratio stable

(to preserve color fidelity), a good way to do it is have the basic structures

be as similar as possible, having just the minimal changes needed to shift

the light absorption spectra.

-----------------------------------------------

Biologists 'explain' it (!)

-- Transduction

process by which the retina translates light energy into nerve impulses:

light strikes the chromophore,

which detaches from the photopigment,

which transforms the backbone,

which causes an enzyme chemical cascade,

which changes the ion permeability of the cell walls,

which alters the electric charge of the cell,

which alters its baseline synaptic activity,

which changes the pattern of activity among secondary cells in the retina.

The entire sequence, from photon absorption to nerve

output, is completed within 50 microseconds.

-- When the

chromophore is struck by a photon of the right quantum energy or wavelength,

it instantly changes shape (a photoisomerization to all-trans retinal)

which detaches it from the backbone. As a result the opsin molecule also

changes shape and in its new form acts as a catalyst to other enzymes in

the outer segment, which briefly close the nearby sodium ion (Na+) pores

of the cone outer membrane. This changes the baseline electrical current

across the cell body, which alters the concentration of a neurotransmitter

(glutamate) between the cone synaptic body and the retinal secondary cells.

As the number of absorbed photons increases, the secretion of neurotransmitter

decreases. (Note --- This mechanism might explain why it takes a 1,000

or so photons to get a cone cell to respond.)

-----------------------------------------------

Cell membranes in light sensitive cells

What the biologists

are trying to say (in the sections above) in their (overly) complicated

way is that it all comes down to flipping the cell membrane voltage. Photoreceptor

cells of the eye are a type of 'active cell' meaning they are able to quickly

(50 usec) change their membrane voltages, in much the same way that muscle

and nerve cells do, but in this case it's photons that trigger the cell

membrane voltage changes (via modulation of Na+ flows).

Like all mammalian cells the photoreceptor cells of the eye have pumps which keep the concentration of K+ inside the cell pumped up and the concentration of all other ions like Na+ and Ca2+ pumped down. In the dark the cell membranes have open channels for K+ (ungated) and Na+ (light gated) and Ca2+ (voltage gated), which have diffusion flows, the concentrations being maintained by a high density of K+/Na+ pumps.

Wikipedia calls

photoreceptor cells "strange" because in their dark state (normal state)

the cell voltage is 'depolarized' (due to the leaky Na+ ion channels) causing

the cell voltage to be (partially) collapsed at -40 mv. Photon hits trigger

the Na+ and Ca2+ channels to close allowing the cell voltage to

fully polarize (or hyperpolarize, which just means to increase the potential)

to -70mv, set by the ungated K+ channels. It is this light induced voltage

change across the membrane that activates the next cell and sends an excitatory

signal down the neural pathway. The Na+ ion diffusion flow into a dark

cell is called the 'dark current'. In an (unspecified) time after a photon

hit, the Na+ channels reopen, the cell voltage drops back to its depolarized

-40mv, and the cell is ready for another photon hit.

----------------------------------------------------------------------------------------------------------------

-- In

humans (and many vertebrates) the chromophore molecule is retinal, so all

photopigment differences arise in the opsin backbone. This backbone determines

the ability of the photopigment, and the photoreceptor cell that contains

it, to respond to light.

-- carry genes for different amino acid sequences within the basic opsin backbone. These amino acid substitutions change the sensitivity of the photopigment to light.

-- • short

wavelength or S cones, containing cyanolabe and most sensitive to "blue

violet" wavelengths at around 445 nm.

• medium wavelength or M cones, containing chlorolabe and most sensitive

to "green" wavelengths at around 540 nm.

• long wavelength or L cones, containing the photopigment erythrolabe and

most sensitive to "greenish yellow" wavelengths at around 565 nm

-- The probability that a photon will photoisomerize a visual pigment depends on the wavelength (energy) of the light. Each photopigment is most likely to react to light at its wavelength of peak sensitivity. Other wavelengths (or frequencies) can also cause the photopigment to react, but this is less likely to happen and so requires on average a larger number of photons (greater light intensity) to occur. The electron structure of the photopigment is "tuned" to a preferred quantum energy in the photon.

-- The relationship between pigment photosensitivity and light wavelengths can be summarized as a relative sensitivity curve across the spectrum for each type of photopigment or cone. As the curve gets higher, the probability increases that the photopigment will be bleached or the photoreceptor cell will respond at that wavelength.

-- A plausible assumption adopted in colorimetry is that each type of cone contributes equally in the perception of a pure white or achromatic color. Each type is given equal perceptual weight in the visual system. In this type of display, the peak of each sensitivity curve is scaled up or down so that the area under the curve (equivalent to the total response sensitivity of each type of cone, pooled across all cones) is equal to some arbitrary value; the curves are then presented on a linear vertical scale.


(linear)

cone sensitivity, weighted by cone population

cone sensitivity weighted by equal area for colorimetry

Color vision evolution

Scientific

American in an April 2009 article on the Evolution of Primate Color

Vision makes a couple of interesting points. One is that most non-primate

mammals are dichromats, i.e. they have only two types of cones. As shown

in the Scientific American article their cone spectrums (which seem idealized)

are similar to human blue (S) and green (M), in other words they are missing

the human red. Some birds, fish and reptiles have four types of cones allowing

them to see into the ultraviolet. Primates like humans are trichromats.

Having just three color pigments is pretty unusual in the animal kingdom,

so this probably indicates we and primates share a common evolutionary

history.

Red (L) evolved from green (M)

In the 1980's

the genes for the three pigments were found, and they have now been sequenced.

A surprising result has been that the amino acid sequence of the green

(M) and red pigments (L) are "almost identical", and their genes sit side

by side on the X chromosome (sex chromosome). In contrast the blue gene

(S) sits on chromosome 7 and it codes a substantially different amino acid

sequence. Thus it looks like the red (L) primate pigment gene probably

came from the green (M) pigment gene (a duplication plus a small change),

and the authors trace this development through various monkeys. The

red pigment (L) is just a variant on the green pigment (M). This looks

very believable when the cone spectrums are examined (see above).

Red is very close to green, unreasonably close if you were to sketch out an ideal set of spectrums. Red looks like green just shifted to a slightly longer wavelength. The difference between the peaks of green and red is only 30 nm (530 to 560 nm), a difference of only 5.5%.
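The 5.5% figure, and what it means in photon energy terms, can be checked with a few lines. The energy formula E = hc/wavelength is standard physics; the peak values are the ones used in the paragraph above, and the helper name `photon_energy_ev` is made up for this sketch.

```python
PLANCK_H = 6.62607015e-34       # Planck constant, J*s
LIGHT_C = 2.99792458e8          # speed of light, m/s
EV_IN_JOULES = 1.602176634e-19  # joules per electron-volt


def photon_energy_ev(wavelength_nm):
    """Energy of one photon (eV) at the given wavelength, E = h*c/wavelength."""
    return PLANCK_H * LIGHT_C / (wavelength_nm * 1e-9) / EV_IN_JOULES


green_peak_nm, red_peak_nm = 530.0, 560.0  # peak values used in the text
separation_nm = red_peak_nm - green_peak_nm
relative = separation_nm / ((green_peak_nm + red_peak_nm) / 2)  # vs. midpoint

print(f"separation: {separation_nm:.0f} nm ({relative:.1%} of the midpoint)")
print(f"green peak photon: {photon_energy_ev(green_peak_nm):.2f} eV")
print(f"red peak photon:   {photon_energy_ev(red_peak_nm):.2f} eV")
```

So the green and red peaks differ by only a little over a tenth of an electron-volt per photon.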

-- The large differences in peak elevations (especially when compared to the population weighted cone fundamentals) imply that the S (short wavelength) cone outputs must be heavily weighted in the visual system far out of proportion to their numbers in the retina. This turns out to be true. In addition, the proportionally small overlap between the S cone curve and the L and M curves implies that short wavelength ("violet") is handled as a separate chromatic channel and is perceptually the most chromatic or saturated. These statements are also true. (Translation --- at both ends of the spectrum where only one type of cone has any significant output the brain can't really ratio, so subtle shades of color discrimination won't be possible.)

-- We might also suspect that the L (long wavelength) and M (medium wavelength) cones have a different functional role in color vision from the S cones, because they have very similar response profiles across the spectrum and lower response weights than the S cones. And this is also true: the L and M cones are responsible for brightness/lightness perception, provide extreme visual acuity, and respond more quickly to temporal or spatial changes in the image; the S cones contribute primarily to chromatic (color) perceptions. (Translation --- There are not enough S cones to contribute much to brightness, so its role must be to provide another signal to ratio, so improving color discrimination in the blue-green half of the visible spectrum, and possibly extending the visible spectrum a little deeper into the violet.)

cone & rod luminance (apparent brightness) to

the human eye vs frequency (log scale)

-- There are about 16 rods for every cone in the eye, but there are only about 1 million separate nerve pathways from each eye to the brain. This means that the average pathway must carry information from 6 cones and 100 rods! This pooling of so many rod outputs in a single signal considerably reduces scotopic visual resolution and means, despite their huge numbers, that rod visual acuity is only about 1/20th that of the cones.

Note also that if each rod is 128 times more light sensitive than a cone (meaning it triggers with 8 or so photons vs 1,000 for cones) and there are 16 times more rods than cones, then rod vision can operate at light levels 2,000 times lower than cone vision. This helps explain why the eye has such a wide dynamic range, about a billion to one (Wikipedia), far exceeding that of color film.
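Here is the arithmetic behind the last two paragraphs as a quick check. All the inputs are the round numbers quoted above; multiplying the per-cell sensitivity by the population ratio is the simple estimate used in the text, not a physiological model.

```python
rod_vs_cone_sensitivity = 128     # per-cell sensitivity ratio quoted in the text
rods_per_cone = 16                # population ratio quoted in the text
photons_per_cone_trigger = 1_000  # rough cone threshold used in this essay
cones_per_pathway = 6             # average cones per nerve pathway (text)

photons_per_rod_trigger = photons_per_cone_trigger / rod_vs_cone_sensitivity
combined_advantage = rod_vs_cone_sensitivity * rods_per_cone
rods_per_pathway = cones_per_pathway * rods_per_cone

print(f"photons to trigger a rod: about {photons_per_rod_trigger:.0f}")
print(f"rod vision light-level advantage: about {combined_advantage:,}x")
print(f"rods sharing one pathway: about {rods_per_pathway}")
```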

-- How does the mind "triangulate" from the separate cone outputs to identify specific colors? We obtain this picture by charting the proportion of cone outputs in the perception of a specific color. This results in a literal triangle, the trilinear mixing triangle, that contains all possible colors of light.

-- A single type of cone cannot distinguish wavelength (hue) from radiance (intensity). Modulating a single "green" wavelength of light between bright and dim, or alternating between equally bright "green" and "red" wavelengths, will produce an identical change in the output of a solitary L, M or S cone.
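A small sketch shows why one cone type alone is 'color blind'. A dim light at the cone's peak wavelength and a brighter light off the peak can produce exactly the same output. The Gaussian curve shape and 40 nm width are my assumptions for illustration only (the M cone peak of 540 nm is the value quoted in this essay), and `m_cone_output` is a made-up name.

```python
import math

M_PEAK_NM = 540.0          # M cone peak quoted in this essay
ASSUMED_SIGMA_NM = 40.0    # ASSUMPTION: Gaussian curve width, for illustration


def m_cone_output(wavelength_nm, intensity):
    """Output of a lone M cone: intensity scaled by its assumed sensitivity."""
    sensitivity = math.exp(
        -0.5 * ((wavelength_nm - M_PEAK_NM) / ASSUMED_SIGMA_NM) ** 2
    )
    return intensity * sensitivity


dim_green = m_cone_output(540.0, intensity=0.5)
# Intensity of 600 nm ("red") light that gives the same M cone output.
matching_intensity = 0.5 / m_cone_output(600.0, intensity=1.0)
bright_red = m_cone_output(600.0, intensity=matching_intensity)

print(f"dim green output:  {dim_green:.3f}")
print(f"bright red output: {bright_red:.3f} (intensity {matching_intensity:.2f})")
```

The two outputs are identical, so a second (and third) cone type is needed to tell the two lights apart.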


-- No matter how you represent it, color perception defined by cone fundamentals is highly irregular and imbalanced — not at all like the color wheels artists use!

** -- An assumption made in most studies of animal vision is that the photopigment absorption curves conform to a common shape, for example Dartnall's standard shape (right), which is plotted around the pigment's peak value on a wavenumber scale. This common shape arises from the backbone opsin molecule structure.

** -- Variations in the opsin amino acid sequence only shift the wavenumber of peak sensitivity up or down the spectrum; this does not change the basic shape of the curve, though it becomes slightly broader in longer wavelengths.

-- A third constraint (on the range of wavelengths the eye can see) has to do with the span of visual pigment sensitivity, because the sensitivity curves must overlap to create the "triangulation" of color. For Dartnall's standard shape at 50% absorptance, this implies a spacing (peak to peak) of roughly 100 nm: including the "tail" responses at either end, a three cone system could cover a wavelength span of about 400 nm. (human range is 300 nm -- 400 to 700 nm)

-- • At wavelengths below 500 nm (near UV), electromagnetic energy becomes potent enough to destroy photopigment molecules and, within a decade or so, to yellow the eye's lens. Many birds and insects have receptors for UV (ultraviolet) wavelengths, but they have relatively short life spans and die before UV damage becomes significant. Large mammals, in contrast, live longer and accumulate a greater exposure to UV radiation, so their eyes must adapt to filter out or compensate for the damaging effects of UV light. In humans these adaptations include the continual regeneration (grows like hair) of receptor cells, and the prereceptoral filtering by the lens and macular pigment. (Comment --- very interesting. Photoreceptor cells are continually being replaced.)

separating luminance (brightness) from hue (color)

responses in two cones

(defined as the sum and difference of their outputs)

-- All primates — monkeys, apes and humans — acquired a second set of contrasting receptor cells: the L and M cones, which evolved from a genetic alteration in the mammalian Y cone. There is only a small difference between the L and M cones in molecular structure and overlapping spectral absorptance curves, but it is enough to create a new color system called Trichromatic Vision.

-- The main benefit of trichromacy is that it creates a unique combination of cone responses for each spectral wavelength and unambiguous hue perception.

-- Males are also split roughly 50/50 by a single amino acid polymorphism (serine for alanine) that shifts the peak sensitivity in 5 of these (10 known) variants, including the normal male L photopigment, by about 4 nm. (Comment --- interesting. Men split into two groups that see colors (very) slightly differently (4 nm is only a 1/2% to 1% difference).)

** --

From a design perspective, the most interesting question is why the three

cone fundamentals are so unevenly spaced along the spectrum and unequally

represented (63% L, 31% M and 6% S) in the retina. The answer is basically

that it's an adaptation to reduce chromatic aberration. For example,

• An overwhelming population (roughly 94%) of L and M receptors, and a

close spacing between their sensitivity peaks, which limits the requirement

for precise focusing to the "yellow green" wavelengths.

• A sparse representation by S receptors in the eye (6% of total), which

substantially reduces their contribution to spatial contrast, and the nearly

complete elimination of S cones from the fovea (center of the retina),

where sharp focus is critical.

• Separation of luminosity (contrast) information and color information

into separate neural channels, to minimize the impact of color on contrast

quality.

Comment --- A reduction of chromatic aberration? The arguments seem to make some sense, but it seems to me that if the eye's lens is like a simple piece of glass (is it?), then the focus of white objects would be different across the spectrum of the L and M cones.

Super link for color vision is below (very well written

and comprehensive). All the text and figures above are from this link.

http://www.handprint.com/HP/WCL/color1.html#maxtriangle

--------------------------------------------------------------------------------------------------------

Ultraviolet light and health (4/08)

Slate had a short article on UV, sunscreen and skin cancer that had this

remarkable claim:

"How many people realize that the principle cause of melanoma is UV-A radiation, which isn't blocked by sunscreen at all? The Food and Drug Administration doesn't even consider UV-A in its labeling requirements for the product." (Slate 4/15/08 by Darshak Sanghavi)

Is this true? I didn't initially have a clue. I didn't even know where in the spectrum UV-A lay. I did know from personal experience that sunscreen is very effective at stopping sunburn. I did a little research on the ultraviolet and here is what I found.

As many of the figures in this essay show, the visible spectrum (in round numbers) is about 400 to 700 nm, but Wikipedia in its entry on the visible spectrum gives the range as 380 nm to 750 nm, just about an octave. The lens and cornea of the eye absorb strongly at wavelengths shorter than 380 nm. Above the visible range (in frequency) begins the ultraviolet, which is usually broken down into three regions called UV-A, UV-B and UV-C.

Apparently the wavelength limits of the ultraviolet ranges are rather informal, because they differ slightly in different references. Below are the numbers from Wikipedia -- 'Ultraviolet'. UV-A it turns out is the lowest energy ultraviolet, the ultraviolet region just above the visible.

| Band | Wavelength range | Ratio | Notes |
|---|---|---|---|
| visible | 750 to 380 nm | 1.97 | |
| UV-A | 400 to 315 nm | 1.27 | black light is 350 to 400 nm |
| UV-B | 315 to 280 nm | 1.125 | causes sunburn & vitamin D |
| UV-C | 280 to 200 nm (100 nm) | 1.40 | blocked by ozone layer |
| Vacuum/Extreme | 200 to 10 nm | 20.0 | blocked by air |
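The ratio column, and the 'octave' language used in the comments that follow, can be reproduced with a short sketch. An octave is a factor of two in frequency (or wavelength), so the number of octaves is just the base-2 log of the ratio. The band edges are the Wikipedia numbers from the table above; the function names are made up for this example.

```python
import math

# (band, long-wavelength edge in nm, short-wavelength edge in nm), from the table
BANDS = [
    ("visible", 750, 380),
    ("UV-A", 400, 315),
    ("UV-B", 315, 280),
    ("UV-C", 280, 200),
    ("Vacuum/Extreme", 200, 10),
]


def band_ratio(long_nm, short_nm):
    """Ratio of the band's edges (same number in wavelength or frequency)."""
    return long_nm / short_nm


def band_octaves(long_nm, short_nm):
    """Width of the band in octaves (factors of two)."""
    return math.log2(long_nm / short_nm)


for name, long_nm, short_nm in BANDS:
    print(f"{name:15s} ratio {band_ratio(long_nm, short_nm):6.3f}  "
          f"octaves {band_octaves(long_nm, short_nm):5.2f}")

print(f"UV-A + B + C (400 to 200 nm): {band_octaves(400, 200):.2f} octave")
```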

A couple of comments about the ultraviolet spectrum:

* From a human health perspective the ultraviolet range can be considered to be just UV-A, B, & C, because ordinary air is opaque below 200 nm wavelength, with oxygen absorbing strongly in the 150 nm to 200 nm range (Wikipedia). UV-A, B, & C together are the octave in frequency (400 to 200 nm) just above the visible octave (750 nm to 380 nm). This is in contrast to the upper range of the ultraviolet (called vacuum and extreme), which extends through a frequency ratio of 20, or more than 4 octaves.

* While the sun does emit energy in the UV-A, B, and C range, Wikipedia says "98.7% of the ultraviolet radiation that reaches the Earth's surface is UV-A." The lower end (in frequency) of the UV-A range is where UV 'black lights' radiate (350 to 400 nm).

* The UV-B range (315 nm to 280 nm) is very narrow (range of 1.125). Since this range is responsible for most sunburn, I suspect it has been defined biologically. And it is true that most sunscreens are only rated for blocking UV-B. Some newer 'full spectrum' sunscreens do have some reduction of UV-A.

* It is the center of the UV-B range (295 to 300 nm) that has the very positive biological effect of inducing the production of vitamin D in the skin. The conundrum of UV-B is this: "Too little UVB radiation leads to a lack of Vitamin D. Too much UVB radiation leads to direct DNA damages." (Wikipedia) The skin pigment, melanin, slows vitamin D production because it acts as a UV-B filter, absorbing the photons' energy into the vibrational modes of the molecule (called 'internal conversion'), thus converting it harmlessly to heat.

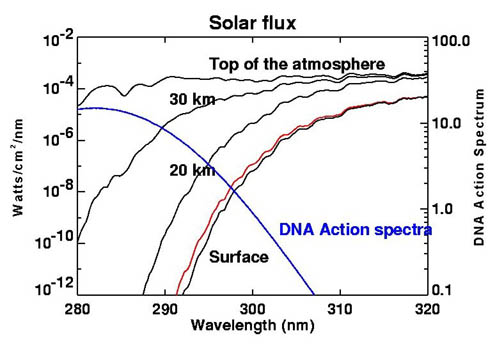

* Here's a figure from Wikipedia -'Ozone layer', which shows how the ozone layer absorbs in the UV-B range, and the frequency range over which DNA can be damaged. Notice the sharp cut off at 290 nm wavelength. This means virtually no UV-C from the sun reaches the surface of the earth.

Attenuation of UV-B ultraviolet due to ozone layer

(Wikipedia)

Notice --- Solar flux density at top of atmosphere

is (about) 1 w/m^2/nm.

This means that solar power in the UV-B range is about

35-40 w/m^2,

or about 3% of total solar power (1,370 w/m^2).
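The UV-B power estimate is just the flux density times the width of the band. Here it is spelled out, using the roughly 1 w/m^2/nm value read off the figure and assuming (my simplification) that the flux density is flat across the UV-B band.

```python
flux_density_w_per_m2_nm = 1.0      # approximate value read off the figure
uvb_long_nm, uvb_short_nm = 315, 280
total_solar_w_per_m2 = 1370.0       # total solar power figure used in the text

# ASSUMPTION: flux density is treated as constant across the UV-B band.
uvb_power = flux_density_w_per_m2_nm * (uvb_long_nm - uvb_short_nm)
uvb_fraction = uvb_power / total_solar_w_per_m2

print(f"UV-B power at top of atmosphere: about {uvb_power:.0f} w/m^2")
print(f"fraction of total solar power:   about {uvb_fraction:.1%}")
```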

The figure shows there is a region smack dab in the middle of the UV-B range (292 nm to 308 nm) where DNA can be affected by radiation from the sun. This is in fact the spectrum region that sunscreens are optimized to block.

Wikipedia says, "97% to 99% of the sun's ultraviolet radiation is absorbed by the atmosphere's ozone layer", but it's unclear to me what this means. It might be in terms of energy because UV-B is partially blocked and UV-C and higher are totally blocked. In contrast UV-A sails right through.

* The association of UV-A and skin cancer is not too well known, but there is some evidence (as Slate suggests) that UV-A (might be) a major cause of some skin cancers. UV-A has the least energetic photons of ultraviolet, but UV-A is barely attenuated by the atmosphere and the sun probably radiates strongly in the UV-A range too with the result (as Wikipedia says), "98.7% of the ultraviolet radiation that reaches the Earth's surface is UV-A."

* "In the past UV-A was considered less harmful, but today it is known, that it can contribute to skin cancer via the indirect DNA damage (free radicals and reactive oxygen species). It penetrates deeply, but it does not cause sunburn. Because it does not cause reddening of the skin (erythema), it cannot be measured in sunscreen SPF testing" (Wikipedia)

* "The most deadly form of skin cancer - malignant melanoma - is mostly caused by the indirect DNA damage (free radicals and oxidative stress). This can be seen from the absence of a UV-signature mutation in 92% of all melanoma." (Wikipedia)

** Bottom line

--- Wikipedia pretty much confirms what Slate said -- sunscreen ratings don't check UV-A, and UV-A via an indirect mechanism could very well be the cause of the most deadly skin cancer, melanoma.

--------------------------------------------------

Johns Hopkins professor, Jeremy Nathans, answers my email

(update 4/22/09)

April 2009

Scientific American had an article on primate color vision by Jeremy Nathans (Johns Hopkins) & Gerald H. Jacobs (Univ of California). I was able quickly to find the email address of Jeremy Nathans, who specializes in color vision, and asked him about the shape and stability of the spectral response curves of cones. Dr. Nathans was nice enough to answer me.

He explains that the shape of the spectral response is (as I guessed) a thermal effect, meaning (I think) that vibrations of the atoms at body temperature modify considerably (says Dr. Nathans) the energy that the photon needs to supply to excite an electron. For answers to my other questions he referred me to this 100 dollar textbook:

"The First Steps in Seeing" by Robert W. Rodieck

I bought this book when I found a good quality used copy via Amazon for about 35 dollars, and I am now reading it. It's an introductory text (published in 1998) with a wealth of detail (with numbers!, probably because Rodieck was an EE, says Nathans) on vision at the cell and molecular level. My plan is to pull together an engineering type block diagram to summarize it.

---------------------------------------------

Nathans on evolutionary origin of tri-color vision

Turns out

Nathans is an MIT graduate (79) and after the Scientific American article

he came to MIT and gave a talk. He also just won a prize. Here's a link

to a video of his MIT talk (1:07 hour) on the evolution of tri-color vision.

http://mitworld.mit.edu/video/669

----------------------------------------------

(Here's my email to him, followed by his reply.)

On Apr 23, 2009, at 2:30 PM, Donald Fulton wrote:

Dr. Nathans

Enjoyed your recent vision article in Sc Am. I'm hoping you can help me

understand a vision/color problem that has always bothered me. I understand

that the brain 'sees' color using the 'ratio' of the outputs of the S,M,L

cone cells (with some post processing). What I would like to understand

is how the shape, gain (amplitude) and center of the spectral responses

of the three cone cells (appear to be) so accurate, stable, and reproducible.

What is it that sets the 'shape' of the cone spectral responses? Is this a quantum mechanical effect? Is the spectral spreading about the center a thermal effect due to atomic vibrations? And what about the 'gain' of the retina cells and associated nerve firing rates? Don't nerve responses vary a lot between individuals? Here I see that the fact that a ratio is being used might be very effective at removing this source of color error.

And/or is the brain reducing color errors by some sort of calibration procedure? I am a control engineer and know that in computer controlled machines sensor errors (gain and offset errors) are routinely corrected for by computer, but this is only possible because at final test of the machine the errors of the various sensors are isolated, measured, and stored away. Does the mother say to the baby this is red, green, blue and in this way the baby's brain calibrates the cones output ratios? Seems hard to believe.

Thanks

Don Fulton

----------

Don,

Great questions.

Can I refer

you to the following excellent book for insight into these issues, which

are nontrivial:

The First

Steps in Seeing: Robert W. Rodieck. Incidentally, Rodieck trained

as an electrical engineer before going into vision research.

It is available at Amazon.com.

The only question that I can answer briefly without short-changing the science is:

What is it that sets the 'shape' of the cone spectral responses? Is this a quantum mechanical effect? Is the spectral spreading about the center a thermal effect due to atomic vibrations?

It is a photon absorption that boosts a pi-electron from ground to excited state (governed of course by quantum mechanics). The exact shape reflects the vibrational energy of the ground and excited states and the degree of vibrational excitation, which is considerable at room temperature or body temperature (the Franck-Condon principle), just as you surmised.

All the best,

Jeremy

Jeremy Nathans

Molecular Biology and Genetics

805 PCTB

725 North Wolfe Street

Johns Hopkins Medical School

Baltimore, MD 21205

Tel:410-955-4679

FAX: 410-614-0827

e-mail: jnathans@jhmi.edu

=============================================================

Vision at retina level

Info in this

section is based on my readings in a great (introductory) 1998 vision textbook

(below). This book was recommended to me (via email) by vision researcher,

Prof Jeremy Nathans of Johns Hopkins, who in Mar 2009 coauthored an article

on color vision in Scientific American, and who followed up with a talk

at MIT about color vision upon winning a chemistry prize. It's typically

a hundred dollar type book, but I was able to get a clean used copy for

about 35 dollars at Amazon.

First Steps in Seeing by Robert W. Rodieck

Optic nerve (ganglion cell axons) is off center (toward

nose).

Section of retina directly behind center of lens is

called the fovea.

Fovea contains only L and M cones

Retina blood vessels are excluded from fovea

source -- Wikipedia fovea

Retina

The retina

is about 0.4 mm thick (400 microns) and is composed of four layers of cells,

all different. From a common sense viewpoint the retina is built backwards.

It is the outside layer that contains photosensing cells (rods and L, M,

S cones), and light sensing within the rods and cones is at the outside

edge, meaning the light must pass through all four layers of cells to reach

the light sensitive protein. The center of the retina (fovea) is all cones

and away from the center it's mostly rods. Rod and cone cells are long

and thin and tightly packed together. The outside 25% or so of a rod's or cone's 100 micron length is a huge stack (1,000) of membranes filled with the light sensitive protein rhodopsin.

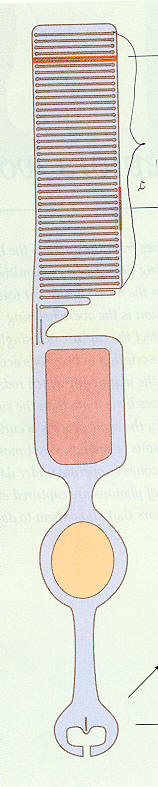

Rods and cones really stack!

In photosynthesis

the thylakoid membranes, where the light capture complexes reside, are

arranged in stacks of 20 or so within the chloroplasts of plants, but in

the retina stacking is extreme. Rods and cones are long narrow cells (75

to 100 microns long) that have at their far ends a stack spanning the width

of the cell that contains about 1,000(!), one thousand, light capturing

membranes. The membranes are chock a block full of the rhodopsin proteins

(80,000 per layer or about 80 million per cell). Photon activation of a

single rhodopsin, out of the 80 million in the cell, can be detected in

rods!

Rod cell structure

(upper) 'Outer segment' has 1,000 membrane layers!

1,000 layers absorb 50% of light and 2/3rd of absorbed

light photoisomerizes retinal

(lower) 'Synaptic gap' signals bipolar cells via voltage

changing glutamate concentration

(pink is inner segment with mitochondria, yellow oval is cell nucleus)

Light actually comes in 'backwards' (from bottom)

(source -- scan from Rodieck book)

Amplification

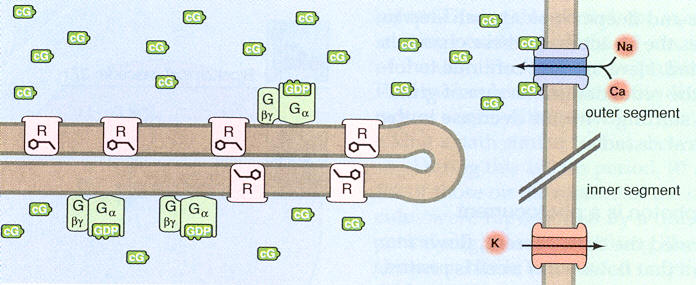

Curiously

the rhodopsin proteins are not fixed within the membranes. The membranes are like fatty layers, and the rhodopsin proteins move around rapidly within them due to Brownian motion. This is important because it provides a major

amplification mechanism. A photon activated rhodopsin in 50 to 100 msec

will travel all around the membrane and activate 1,400 or so G molecules

in the membrane, so this is a x1400 multiplication. Activated G molecules

(via GMP) in turn convert cG molecules in fluid that bump into the membrane

into another form, thus reducing the local concentration of cG in the fluid.

Adenine and guanine

For Na+ and Ca2+ input channels in the outer cell membrane to be open (dark state) several (3 or 4) cG molecules need to be attached to the channel. When local cG concentration in fluid falls, some of the local cG-gated Na+ and Ca2+ input channels have fewer than three cG attached and close. This provides an amplification of x3. The reduction of inflowing +ionic current (Na+ and Ca2+) leads to a small fall in cell voltage, which affects the glutamate concentration in the synaptic region, which is sensed by the next cell in the path (bipolar cells).

The G/cG process in the rod/cones is related to the energy carrier pair ADP and ATP in photosynthesis where phosphate groups are popped on and off. It turns out that 'A' in ATP stands for 'adenosine', which is the DNA base (rung) adenine attached to a sugar. The same sort of phosphate on/off mechanism also happens in a molecule derived from the DNA base 'guanine'. GMP is part of a family which includes GTP, the guanine analogue of ATP. (GTP like ATP is triphosphate version and GMP like AMP is the monophosphate version).

From an evolutionary point of view there is a huge advantage if an eye cell can sense a single photon, meaning capture it and signal the next cells in the path toward the brain. Rods (and probably cones too says Rodieck) do reliably sense single photons. About half the light passing through the 1,000 layer stack is absorbed and half transmitted. The fraction of photons absorbed that are usefully sensed, technically those that photoisomerize a rhodopsin molecule, is about 68%. The photoisomerized protein, using the light energy from the captured photon, straightens a bend angle in the enclosed retinal molecule. This in turn slightly changes the size of the rhodopsin molecule, which produces a reduction in the concentration of a circulating small molecule (cG), which leads to a closing of ion channels in the membrane, which leads to a small change in cell membrane voltage (delta -1 mv = -35 mv to -36 mv), which through a glutamate signal is sensed by the next cells in the pathway (bipolar cells).

Without doubt there is a huge difference in the level of light and dynamic range of light over which the two systems must work. Photosynthesis works well at fairly high light levels and only over a fairly limited light dynamic range. The dynamic range of the eye is huge (10^15). Photosynthesis light capture is organized around the reaction center consisting of just a few hundred molecules. For full Z cycle photosynthesis of plants two photons must be captured by a single reaction center within some capture time window (few msec?). In a single rod it takes light levels about 100,000 (= 2 x 85 min threshold/100 msec) higher than the one photon capture threshold for two photons to arrive within the 100 msec 'shutter' time.
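The 100,000 figure can be checked with a couple of lines of Python. This sketch reads "85 min threshold" as roughly one captured photon per rod every 85 minutes at the threshold light level; that reading is an assumption made here, not something checked against Rodieck.

```python
# Assumption: at threshold a rod captures about one photon every 85 minutes.
THRESHOLD_INTERVAL_S = 85 * 60  # seconds between captures at threshold
SHUTTER_S = 0.100               # rod integration ('shutter') time
PHOTONS_NEEDED = 2              # photons wanted inside one shutter time

# Light must be brighter by this factor for the average gap between
# captures to shrink so that two captures fit in one shutter time.
brightness_ratio = PHOTONS_NEEDED * THRESHOLD_INTERVAL_S / SHUTTER_S

print(f"required increase over threshold: about {brightness_ratio:,.0f}x")
```

This is an average-rate argument only; photon arrivals are random, so occasional two-photon coincidences would happen at lower light levels too.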

A photon passing through a retina photosensor cell (rod or cone) passes through 1,000 stacked layers containing a total of something like 80 million (= 80,000 rhodopsin/layer x 1,000 layers) light capture proteins. Absorption and 'fixing the bend' (photoisomerization) in any of the 80 million rhodopsin proteins in the cell causes the membrane voltage of the whole cell to go slightly more negative (technically hyperpolarize) pulsing from -35 mv (dark) bias to -36 mv (-1 mv delta) for 100 msec or so, called the 'shutter' time. The 1,000 layer stack makes the probability of absorption of a photon passing through about 50% and about 2/3rds of absorbed photons photoisomerize a rhodopsin molecule, meaning these are the photons that are sensed.
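The numbers in the paragraph above combine as follows. This is a small Python sketch using only the figures quoted from Rodieck; the per-layer absorption probability at the end additionally assumes all 1,000 layers absorb independently and equally, which is a simplification introduced here.

```python
RHODOPSIN_PER_LAYER = 80_000
LAYERS = 1_000
P_ABSORB = 0.5                    # photon absorbed somewhere in the stack
P_ISOMERIZE_GIVEN_ABSORB = 2 / 3  # absorbed photon photoisomerizes a rhodopsin

total_rhodopsin = RHODOPSIN_PER_LAYER * LAYERS
p_sensed = P_ABSORB * P_ISOMERIZE_GIVEN_ABSORB

# Assumption: identical, independent layers, so the stack transmission
# (1 - P_ABSORB) equals the per-layer transmission raised to the LAYERS power.
p_absorb_per_layer = 1 - (1 - P_ABSORB) ** (1 / LAYERS)

print(f"rhodopsin per cell        : {total_rhodopsin:,}")
print(f"photon sensed (per pass)  : {p_sensed:.2f}")
print(f"absorption per layer      : {p_absorb_per_layer:.2e}")
```

So roughly one photon in three passing through the outer segment is actually sensed, even though each individual layer absorbs well under a tenth of a percent.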

A second way the wide dynamic range of retinal photon capture is arranged is by having two types of capture cells: rods and cones. While both can sense a single photon, rods begin to saturate when the capture rate reaches about 100 photons/sec, whereas either a high pass action or AGC (automatic gain control) feedback desensitizes cones at high light levels so they don't saturate and continue to sense light changes even at high steady photon capture rates of (up to) 1,000,000 photons/sec (per cell).

Photoisomerizing electron capture --- one electron in 10,000+

About 2/3rd of photons absorbed in the (outer) stack of rods photoisomerize a rhodopsin molecule, meaning the photon is absorbed by a key electron bond in the enclosed retinal molecule and it straightens the bend in the retinal. Think about how remarkable this is. Even ignoring the electron bonds in structural molecules of the membrane, each rhodopsin protein in the membrane has 348 amino acids. This means that each rhodopsin molecule has more than 10,000 valence electrons (= 348 amino acids x 25 atoms/amino acid x 2 valence electrons/atom), yet the protein is only photoisomerized if a particular electron of the 10,000+ valence electrons absorbs the photon!

This must be a case of tuning. In the whole protein with its 10,000 plus valence electrons only this one electron must be capable of being excited by the few eV of energy in visible light photons. In a sense the whole molecule (& whole three cell layer in the retina) must be transparent except for this one opaque bond.

Comparison between retina and photosynthesis light

capture

There

appears to be less molecular machinery involved in retina photon light

capture than for photosynthesis. For example in rods and cones membranes

there is only one type of light capture protein, no electron transport

chain, no pumping of protons, no complex of proteins in the fluid assembling

sugar. My guess is that the density of light capture proteins is probably higher in rod and cone membranes than in thylakoid membranes.

A similarity between photosynthesis and eye photon capture is that the energy of the photon drives the process. In the case of photosynthesis it provides all the energy and in the case of the eye it drives the membrane voltage change and the molecule cascade that resets the bend in the rhodopsin molecule, which takes about two minutes.

Capture of a single photon in a rod

Overview

As in most

cells the Na+ ion concentration inside rods and cone cells is kept low

by active (ATP powered) Na+/K+ pumping, and Ca2+ concentration is kept

low inside by Na+ concentration powered exchangers. Each rod cell has a

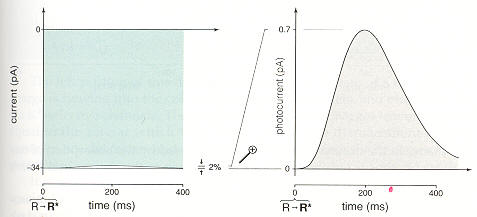

continuous

(dark) looping current flow of 34 pa of Na+ and Ca2+ ions that flow down

the concentration gradients of open channels into the cell and are pumped

out again. One captured photon causes a small (0.7 pa or 2%) transient

reduction in the inward current, which causes a small (-1mv) transient

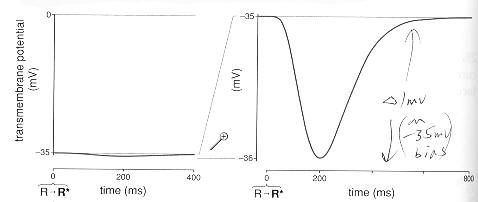

in the cell membrane voltage (-35 mv to -36 mv), which in turn modulates

the glutamate signal to next cells in the pathway (bipolar cells). Below

is the sequence in detail when a photon is captured.

Capture of one photon

When one rhodopsin molecule in a rod (or cone) is photoisomerized by absorbing one photon, about 2% of the total Na+/Ca2+ channels in the cell close. The process is that the single straightened protein while moving through the membrane activates about 700 of another molecule (G molecule), and these molecules modify a small molecule (cG) in fluid that is needed to keep the Na+/Ca2+ channels open. The result is that the local concentration of cG molecules

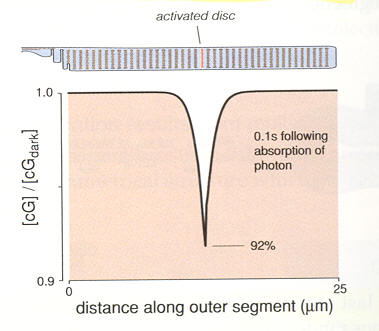

falls and that closes the local Na+/Ca2+ channels. Here's the local reduction

in cG concentration in fluid along the 25 micron length of the 1,000 disks

due to the capture of one photon (in a rod).

Local reduction in cG concentration 100 msec after capture of one photon in a rod

(cG molecules keep Na+ and Ca2+ channels open, so falling cG concentration reduces inflowing Na+ and Ca2+ ionic current)

(source -- scan from Rodieck book)

With the average cell ion input current now reduced by about 0.7 pa (34 pa to 33.3 pa) for 100 to 200 msec and the outward pointing pumps and exchangers still running at the 34 pa bias level, there is a net loss of +charge from the inside of the cell, driving its membrane voltage more negative by about 1 mv (-35 mv to -36 mv). The jargon for the light induced change in cell membrane voltage is hyperpolarize, meaning the cell voltage, already negative (when dark), is driven more negative by light.
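As a quick check on the single-photon current figures, here is a minimal Python sketch using the 34 pa dark current and 0.7 pa drop quoted from Rodieck:

```python
DARK_CURRENT_PA = 34.0       # steady inward Na+/Ca2+ current in the dark
SINGLE_PHOTON_DROP_PA = 0.7  # transient reduction after one captured photon

reduced_current_pa = DARK_CURRENT_PA - SINGLE_PHOTON_DROP_PA
fraction_closed = SINGLE_PHOTON_DROP_PA / DARK_CURRENT_PA

print(f"inward current after one photon: {reduced_current_pa:.1f} pa")
print(f"fraction of current shut off   : {100 * fraction_closed:.1f}%")
```

The drop works out to about 2% of the dark current, matching the statement that one photon closes about 2% of the channels.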

Rod cell 'one photon' membrane ionic current pulse

(source -- scan from Rodieck book)

Rod cell 'one photon' membrane voltage pulse

(source -- scan from Rodieck book)

Note, up to a point, each captured photon in the 'shutter' time of 100 msec or so drives the (peak) cell voltage more negative. It is crudely a linear system, with the change in cell membrane voltage (from -35 mv dark bias) tracking the photons absorbed in the 100 msec shutter time. The cell membrane voltage goes more negative by about 1 mv/photon because each photon statistically (depends on the layers where the photons get absorbed) shuts down another 2% of the Na+/Ca2+ channels. When the light reaches the level of 50 to 100 photons absorbed in 100 msec, the inward Na+/Ca2+ ion current gets completely cut off. This is the light level at which rods saturate.
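The "crudely linear, then saturates" behavior can be sketched as a clipped linear model of the inward current. This is only an illustration under a strong assumption introduced here: every photon closes exactly 2% of the channels, with no overlap between the patches closed by different photons. The function names are made up.

```python
DARK_CURRENT_PA = 34.0
FRACTION_CLOSED_PER_PHOTON = 0.02  # about 2% of channels per captured photon

def fraction_closed(photons_in_shutter: int) -> float:
    """Fraction of Na+/Ca2+ channels closed, clipped at 100%."""
    return min(1.0, FRACTION_CLOSED_PER_PHOTON * photons_in_shutter)

def inward_current_pa(photons_in_shutter: int) -> float:
    """Inward current left after the given number of photon captures."""
    return DARK_CURRENT_PA * (1.0 - fraction_closed(photons_in_shutter))

# Photon count at which this idealized model cuts the current off completely.
saturation_photons = round(1 / FRACTION_CLOSED_PER_PHOTON)

for n in (0, 1, 10, 25, 50, 100):
    print(f"{n:3d} photons -> {inward_current_pa(n):5.2f} pa inward current")
print(f"model saturates at {saturation_photons} photons per shutter time")
```

In this idealized version the current reaches zero at 50 photons per shutter time, the low end of the 50 to 100 range given above; overlap between the regions affected by different photons would push real saturation higher.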

Rod/cone to bipolar cells

Like charges

repel so the local charge imbalance equalizes almost instantly (100 psec)

across the whole of the cell membrane capacitance. This charge spreading

across the cell membrane capacitance is important because it is how one

end of the long narrow cell (rod or cone) 'talks' to the other end. The

end far from the light absorbing layers is where the gaps are to the next cells (bipolar cells) on the way to the brain. The communication across the rod/cone to bipolar gap is via changes in small molecule concentrations in the fluid, with the concentration change roughly linear with the rod/cone voltage change. The result is that a bipolar cell's voltage changes (approx) linearly track the cell voltage changes of the rod or cone cells to which it is coupled.

Bipolar to ganglion V-F cells

The bipolar

cells in turn signal the ganglion cells, which have very long (an inch

or two) axons that extend from the eye to the brain. This axon bundle is

the optic nerve. It is at the ganglion cells that the linear cell voltage

changes (vs light) get converted to a frequency change. In other words

ganglion cells are voltage-to-frequency (V-F) converters, but there is a tricky problem here. If only one type of ganglion cell were to be used it would have to be biased at a fairly high frequency so positive and negative voltage changes could modulate the frequency up and down. V-F converters are better suited for magnitude (or unipolar) signals where an increase in

the magnitude of the voltage can increase the frequency from zero (or near

zero). This is what is done in the vision system.

On/Off ganglion cells

At the bipolar level each rod/cone's changes from the bias are split into two paths. An increase in cell voltage (from bias) goes to On bipolar and On ganglion V-F, and a reduction in cell voltage goes to Off bipolar and Off ganglion V-F. The V-F ganglion cells run in dark at low (noisy) firing rates, so there is some crossover between the On/Off channels. Thus the brain gets from each group of rods and cones sign and magnitude information (from a bias value) on a pair of axons that it must combine and (in some sense) do a frequency (rate) to signal conversion. In addition, in ways not very well understood, some bipolar and amacrine cells in the retina do specialized tasks like horizontal or vertical edge detection, and these signals are V-F converted at ganglion cells and sent to the brain too.
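In engineering terms the On/Off split is a pair of half-wave rectifiers feeding two unipolar V-F converters. Here is a toy Python sketch of that idea; the resting rate and gain are hypothetical numbers picked only for illustration, not values from Rodieck, and the noise mentioned above is left out.

```python
BASE_RATE_HZ = 5.0     # hypothetical low firing rate in the dark
GAIN_HZ_PER_MV = 20.0  # hypothetical V-F gain

def split_on_off(delta_mv: float) -> tuple[float, float]:
    """Split a signed voltage change into On and Off magnitudes."""
    return max(delta_mv, 0.0), max(-delta_mv, 0.0)

def firing_rates(delta_mv: float) -> tuple[float, float]:
    """Firing rates of the On and Off ganglion cells for a voltage change."""
    on_mv, off_mv = split_on_off(delta_mv)
    return (BASE_RATE_HZ + GAIN_HZ_PER_MV * on_mv,
            BASE_RATE_HZ + GAIN_HZ_PER_MV * off_mv)

def decode(on_rate_hz: float, off_rate_hz: float) -> float:
    """Recover the signed voltage change from the pair of firing rates."""
    return (on_rate_hz - off_rate_hz) / GAIN_HZ_PER_MV

for delta in (-1.0, 0.0, 2.5):
    on_hz, off_hz = firing_rates(delta)
    print(f"delta {delta:+.1f} mv -> On {on_hz:.0f} Hz, Off {off_hz:.0f} Hz, "
          f"decoded {decode(on_hz, off_hz):+.1f} mv")
```

Taking the difference of the two rates cancels the shared resting rate, which is one way the pair of axons could carry sign and magnitude without either cell needing a high bias frequency.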

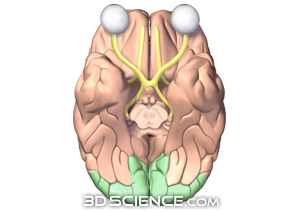

Path to brain

The figure

below shows the visual cortex of the brain (in green), which is where the

retina ganglion signals terminate in a thin layer that maps out the retinal

location of the rods and cones. Notice anything interesting? The visual

cortex of the brain is in the back of the brain about as far from

the eyes as possible! Weird. The optic nerve bundle that leaves the retina

consists of the extended axons of ganglion cells in the retina. The axons

of the retina ganglion cells actually extend all the way from the retina

to the LGN (deep in the brain), which is about half way to the visual cortex.

brain visual cortex in green (http://www.3dscience.com)

The link from

retina to brain visual cortex is actually done in two hops -- two

cells in series. All the ganglion retina cells axons reach (probably two

or three inches) to the LGN (lateral geniculate nucleus, actually there

are two LGN, a right and left.) LGN's may do a little processing (no one

really knows), but it appears that it acts principally as a relay station.

Each optic nerve axon has its own LGN relay axon which extends from the

LGN (two or three inches) to the visual cortex. The name optic nerve at

the LGN changes to 'optic radiation' as it runs from LGN to the visual

cortex. The firing of each LGN axon mirrors the firing it receives from its retina ganglion/optic nerve axon.

Axons -- an aside (Wikipedia)------------------------

Axons are in effect the primary transmission lines of the nervous system, and as bundles they help make up nerves. Individual axons are microscopic in diameter (typically about 1um across), but may be up to several feet in length. The longest axons in the human body, for example, are those of the sciatic nerve, which run from the base of the spine to the big toe of each foot. These single-cell fibers of the sciatic nerve may extend a meter or even longer.

Like all cells the photoreceptor cells of the eye have pumps which pump up the concentration of K+ (inside the cell) and pump down the concentration of all other ions like Na+ and Ca2+. In the dark photoreceptor cell membranes have open channels for K+ (ungated) and Na+ (light gated off) and Ca2+ (voltage gated). Since these channels are to some degree (in the dark) open, the ions diffusing (and drifting) down their channels are recycled and concentrations maintained by a high density of K+/Na+ pumps.

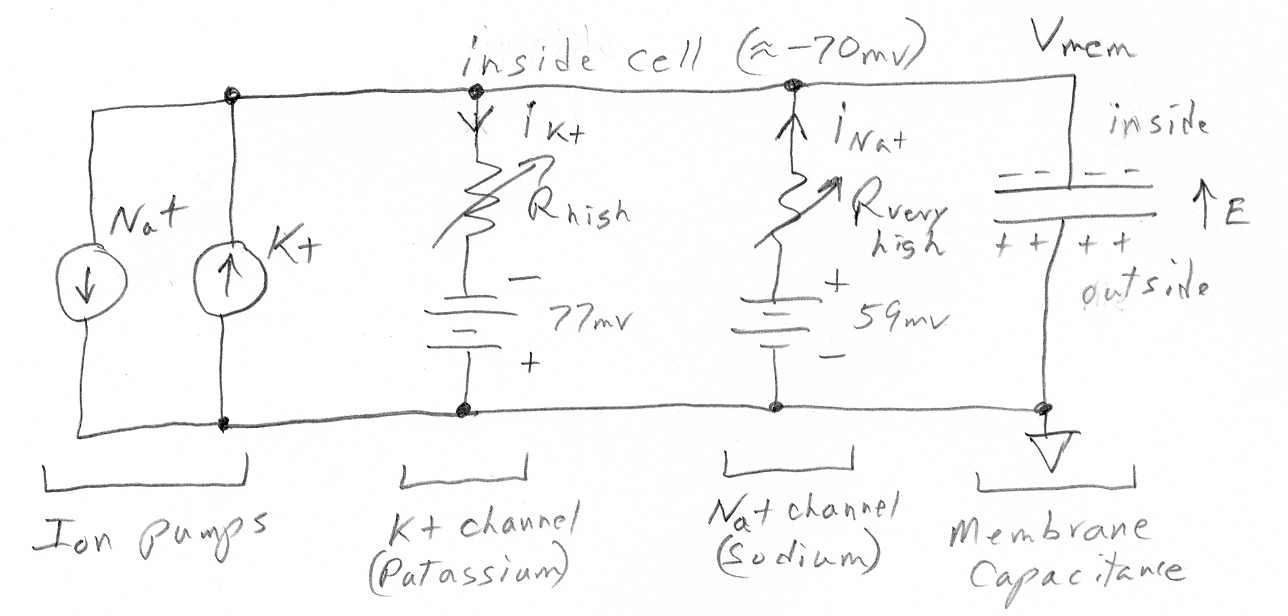

Wikipedia calls photoreceptor cells "strange" because in their dark state (normal state) the cell voltage is 'depolarized' (due to the leaky Na+ ion channels) causing the cell voltage to be (partially) collapsed at -40 mv. Photon hits trigger the Na+ and Ca2+ channels to close allowing the cell voltage to fully polarize (or hyperpolarize, which just means to increase the potential) to -70 mv, set by the open, ungated, K+ channels. It is this light induced voltage change that activates the next cell and sends an excitatory signal down the neural pathway. The Na+ ion diffusion flow into a dark cell is called the 'dark current'. In an (unspecified) time after a photon hit, the Na+ channels reopen, the cell voltage drops back to its depolarized -40 mv, and the cell is ready for another photon hit.

Nernst potentials and ionic channels resistance model

Photons modulate the cell voltage by modulating the R in the Na+ channel.
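A two-branch version of this resistance model is easy to sketch: a K+ branch and a Na+ branch in parallel, each a Nernst battery in series with a channel conductance. The -70 mv K+ level and -40 mv dark voltage are the figures quoted above; the +55 mv Na+ Nernst potential is a typical textbook value assumed here for illustration, and the Ca2+ channels are ignored.

```python
E_K_MV = -70.0    # K+ branch potential (from the text)
E_NA_MV = 55.0    # Na+ Nernst potential (assumed typical value)
V_DARK_MV = -40.0 # dark, depolarized cell voltage (from the text)

def membrane_voltage_mv(g_na_over_g_k: float) -> float:
    """Steady-state voltage of two battery+conductance branches in parallel."""
    return (E_K_MV + g_na_over_g_k * E_NA_MV) / (1.0 + g_na_over_g_k)

def ratio_for_voltage(v_mv: float) -> float:
    """Na+/K+ conductance ratio that produces the given membrane voltage."""
    return (v_mv - E_K_MV) / (E_NA_MV - v_mv)

dark_ratio = ratio_for_voltage(V_DARK_MV)
print(f"dark g_Na/g_K ratio: {dark_ratio:.3f}")

# Light closes Na+ channels, i.e. lowers g_Na (raises R in the Na+ branch).
for open_fraction in (1.0, 0.5, 0.0):
    v = membrane_voltage_mv(dark_ratio * open_fraction)
    print(f"Na+ channels {100 * open_fraction:3.0f}% open -> {v:6.1f} mv")
```

Under these assumptions the Na+ conductance in the dark is about a third of the K+ conductance, and closing all the Na+ channels takes the cell from -40 mv to the -70 mv K+ level.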

In rods the voltage change (from dark) is about -1 mv/photon (-35 mv to -36 mv). The peak cell voltage change (from dark bias voltage) is substantially linear with light, meaning the peak voltage change tracks the number of photons captured in the 100 msec shutter time. When photon capture rates reach 50 to 100 photons/100 msec, substantially all the Na+ channels pulse closed, so the cell voltage (magnitude) increase saturates near the K+ Nernst potential. The dynamic range of rods is quoted as about 100:1, but the saturation curve is a little soft; it slowly bends over, with the 70% point at about 100 photons/shutter time and hard saturation at 500 photons/shutter time.

How do we see blue so well? -- a query

Rodieck says the center of the eye (fovea), where we see with high resolution, contains no S (blue or short wavelength) cones, just L and M cones. L and M cones give basically red/green vision. How then do we see blue so well? I am not aware that a blue object in a scene is less clearly seen than any other color. I do remember that in color television blue is sent with less bandwidth than red and green. However, here is a closeup of a TV shadow mask and on every row if you count three from any color, you get back to the same color, so the 'area' allocated to R, G, and B is the same.

------------------------------------------------------------

Dozen or so slides on color vision --- example of how

various spectra produce same color (#50 to #60 or so)

http://courses.washington.edu/psy333/lecture_pdfs/chapter7_Color.pdf